How to Connect an AI Model to MySQL in 4 Steps

AI is fast becoming an integral part of all kinds of development projects. At the most basic level, this requires us to know how to connect various elements of our applications to AI tools and models.

As you might expect, interactions between our app’s data layer and LLMs are probably the most important component of this.

The challenge is that this can take a number of different forms. This depends on what kind of data we’re using, our use case, and how widespread or varied the interactions we require are.

Today, we’re examining one of the most common examples of this by checking out how we can connect MySQL to AI models.

More specifically, we’ll be covering:

- Why would we connect MySQL to an LLM?

- Options for connecting AI models and databases

- Building an AI-powered incident report form with MySQL and OpenAI

Let’s jump right in.

Why would we connect MySQL to an LLM?

For over thirty years, MySQL has been at the center of all kinds of applications, from large-scale enterprise solutions to hobbyist projects.

This ubiquity means that, at some point, there’s a good chance you’re going to need to connect it to an LLM.

A large part of MySQL’s popularity comes from its reputation for performance, ease of use, and scalability. It’s also open-source, making it free to use and distribute.

However, in recent years, MySQL has lost a certain amount of ground to other database options, including Postgres and a variety of NoSQL tools. These may be better positioned for many AI use cases, including offering more flexibility and support for unstructured data.

Despite this, MySQL still powers a huge number of production systems, and it remains a popular choice for developers that need a fast, reliable way to implement a SQL database.

Some of the most common scenarios where we might need to connect an LLM to MySQL include introducing AI capabilities to existing workflows, legacy app modernization, data lookup and retrieval, and more.


Options for connecting AI models and databases

As we said earlier, one area where this gets difficult is that there are several different ways that we can go about connecting databases and models. Which of these is suitable depends on our requirements, use case, and technical skills and resources.

Often, what this comes down to is the scale and complexity of the interactions we require. Naturally, there’s often a tradeoff between the complexity of a solution and the development resources we need to implement it.

So, for some use cases, such as digital workers or enterprise chatbots, we may need an AI system to be able to autonomously determine which database actions are required in a given context and then execute them.

Generally, this will rely on agentic AI frameworks, such as LangChain. We can think of these as toolkits for building complex AI systems that can act independently in order to achieve a given goal.

Often, in these cases, integrations are handled with a Model Context Protocol (MCP) server. This offers a secure, standardized way to connect models to a range of tools and functions, so systems can determine for themselves which actions are appropriate in specific contexts.

However, just as many real-world solutions rely on more traditional methods to connect SQL databases to AI models.

This means defining logic or events using automation tools, and then passing data to an LLM in response via an HTTP request, populating values in a predefined prompt. This can be hard-coded, or it may rely on visual automation tools.

Essentially, this resembles a traditional workflow automation solution, but leverages an LLM for some element of processing, transformation, or decision-making.

Example use cases include things like translation, categorization, performing calculations, data enrichment, and more, within existing workflows.
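To make the request-based pattern above a little more concrete, here is a minimal sketch of filling a predefined prompt from a database row and wrapping it in an HTTP request. It targets OpenAI's Chat Completions endpoint. The prompt text, the `body` column, the `sk-example` key, and the helper names `fillPrompt` and `buildChatRequest` are all hypothetical and exist only for illustration.

```javascript
// Hypothetical prompt template; {placeholders} are filled from a database row.
const PROMPT_TEMPLATE =
  "Categorize the following support ticket as Billing, Technical, or Other.\n" +
  "Ticket: {body}";

// Fill each {name} placeholder with the matching row value.
// Placeholders with no matching column are left untouched.
function fillPrompt(template, row) {
  return template.replace(/\{(\w+)\}/g, (match, key) =>
    key in row ? String(row[key]) : match
  );
}

// Build the URL and options for a POST to the Chat Completions endpoint.
function buildChatRequest(row, apiKey, model = "gpt-3.5-turbo") {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: fillPrompt(PROMPT_TEMPLATE, row) }],
      }),
    },
  };
}

// Build (but do not send) a request for one example row.
const exampleRequest = buildChatRequest({ body: "I was charged twice." }, "sk-example");
// To send it: const res = await fetch(exampleRequest.url, exampleRequest.options);
```

Keeping the request builder separate from the code that sends it makes the prompt logic easy to check without a network call or a real API key.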

Building an AI-powered incident report form with MySQL and OpenAI

To better understand how we can connect an LLM to MySQL, we’re going to build out an example of how we can achieve this in 大象传媒.

More specifically, we’re going to use 大象传媒’s OpenAI automation action alongside a MySQL database to create an AI-powered form for reporting IT incidents.

Each time a new incident report is submitted, our AI system will use the provided data to triage it, determine an impact level according to defined business rules, and update the database row with this enriched information.

We’ll do this in only four steps:

- Connecting our MySQL database

- Configuring our OpenAI integration

- Building an AI-powered automation rule

- Creating an incident report form UI

If you haven’t already, sign up for a free 大象传媒 account to start building as many applications as you need.


Note that today, we’re using a self-hosted instance of 大象传媒, but you can also take advantage of a range of AI features alongside our internal database within our cloud platform.

We’ll provide the queries you need to create a look-alike database a little later, so that you can build along with this tutorial.

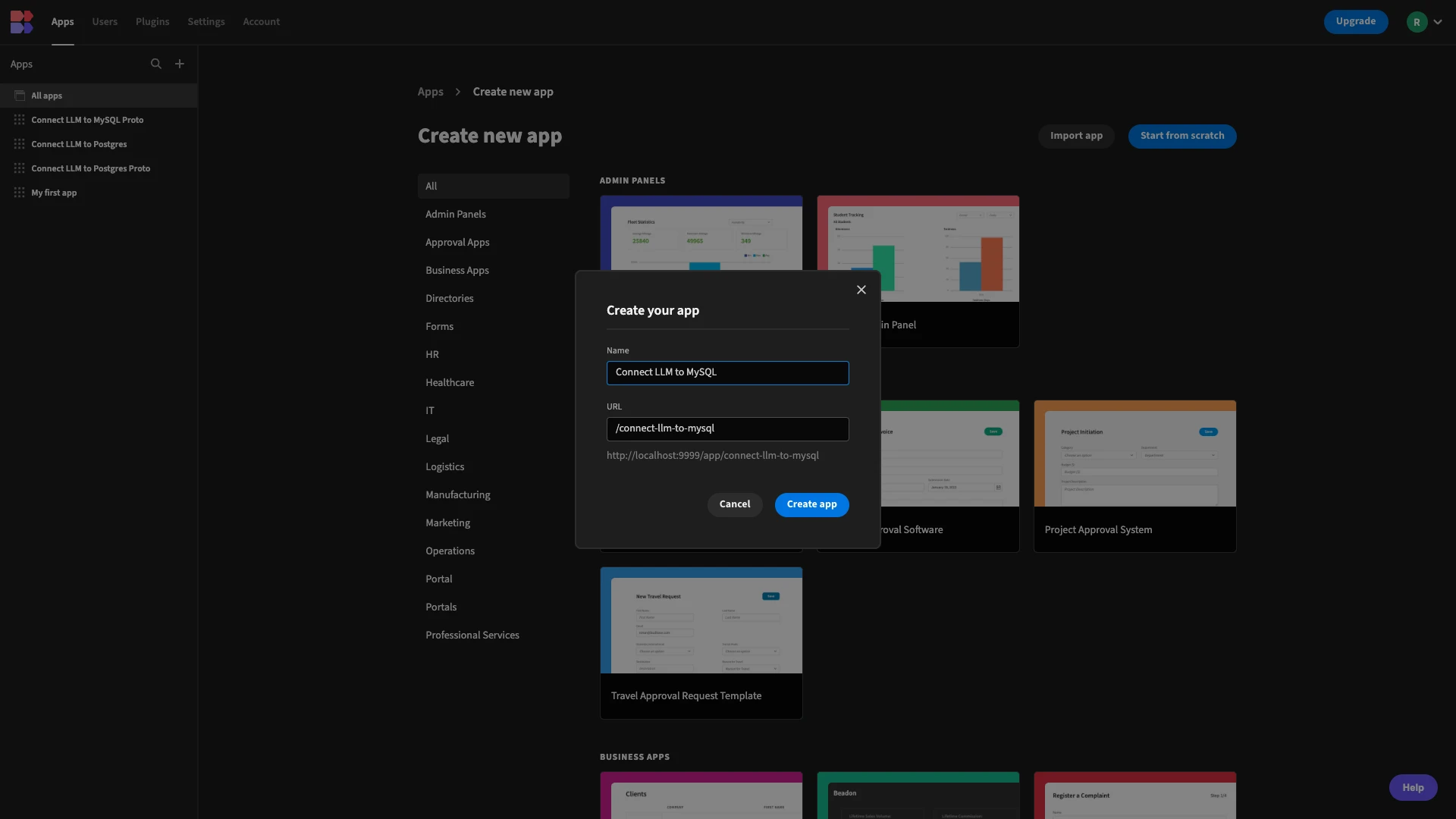

The first thing we need to do is create a new application project. We have the options of using a pre-built template or importing an existing app export, but today we’re starting from scratch.

When we choose this option, we’ll be prompted to give our new app a name. This will also be used to generate a URL extension. For demo purposes, we’re simply going with Connect LLM to MySQL.

When we create a new 大象传媒 app, it will load with sample data and UIs. We won’t use these today, so we can simply delete them.

1. Connecting our MySQL database

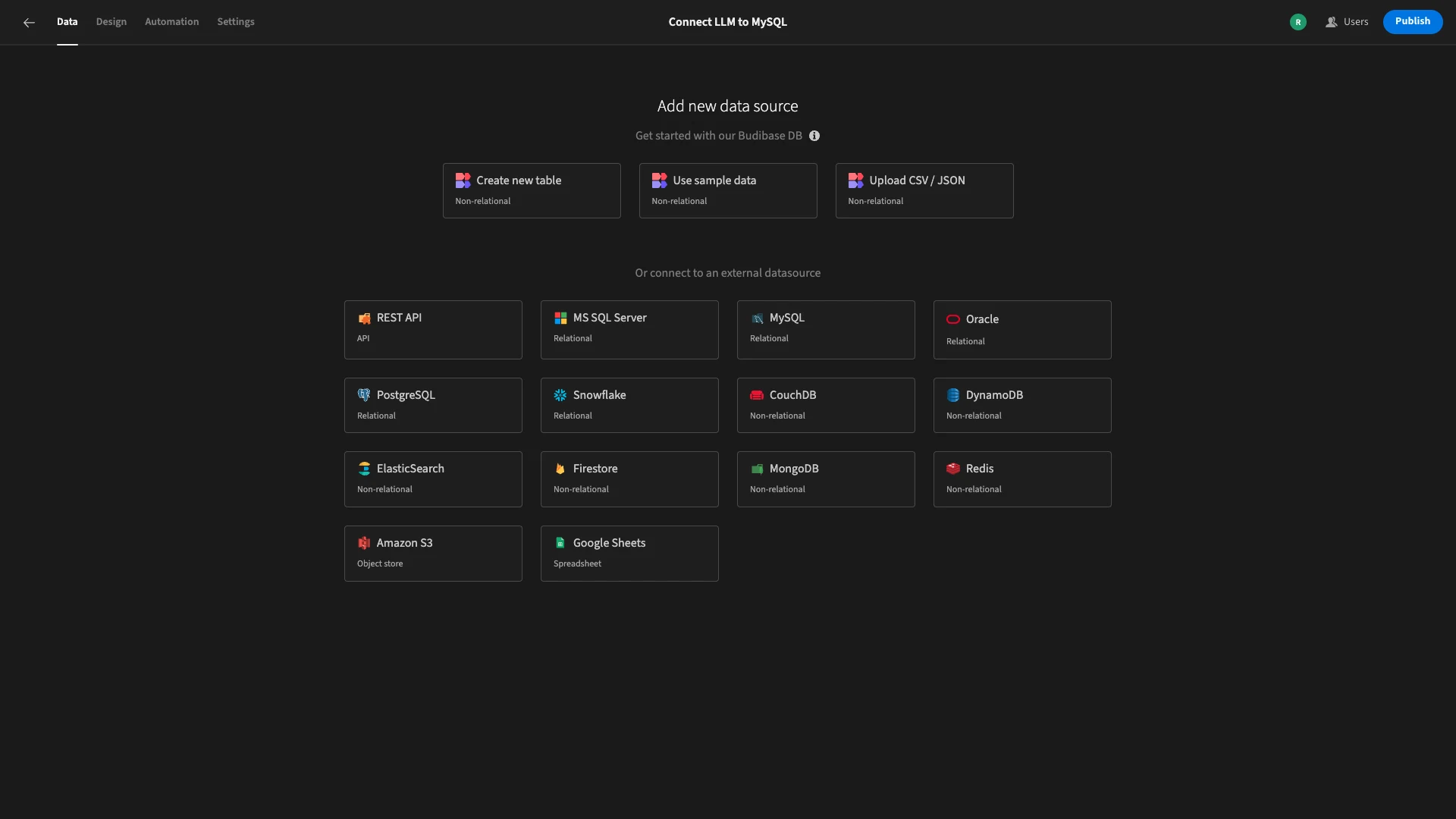

Once we’ve done this, we’ll be prompted to choose a data source for our app. 大象传媒 offers dedicated connectors for a range of RDBMSs, NoSQL tools, spreadsheets, and APIs, acting as a proxy to query data, without storing it.

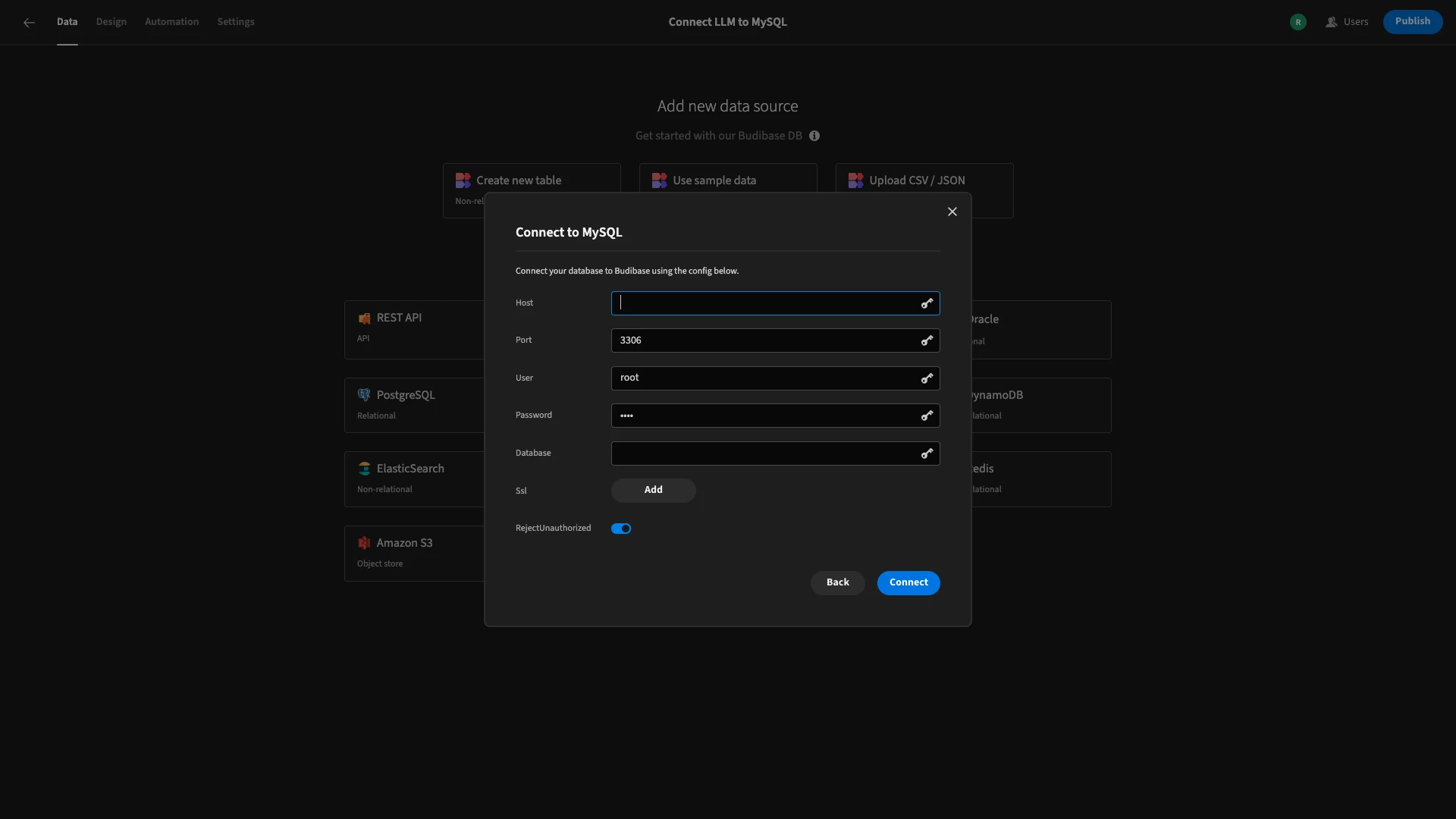

Today, we’re choosing MySQL, although the process for adding an alternative database is largely similar. When we choose this option, we’ll be presented with the following modal, where we can add our configuration details.

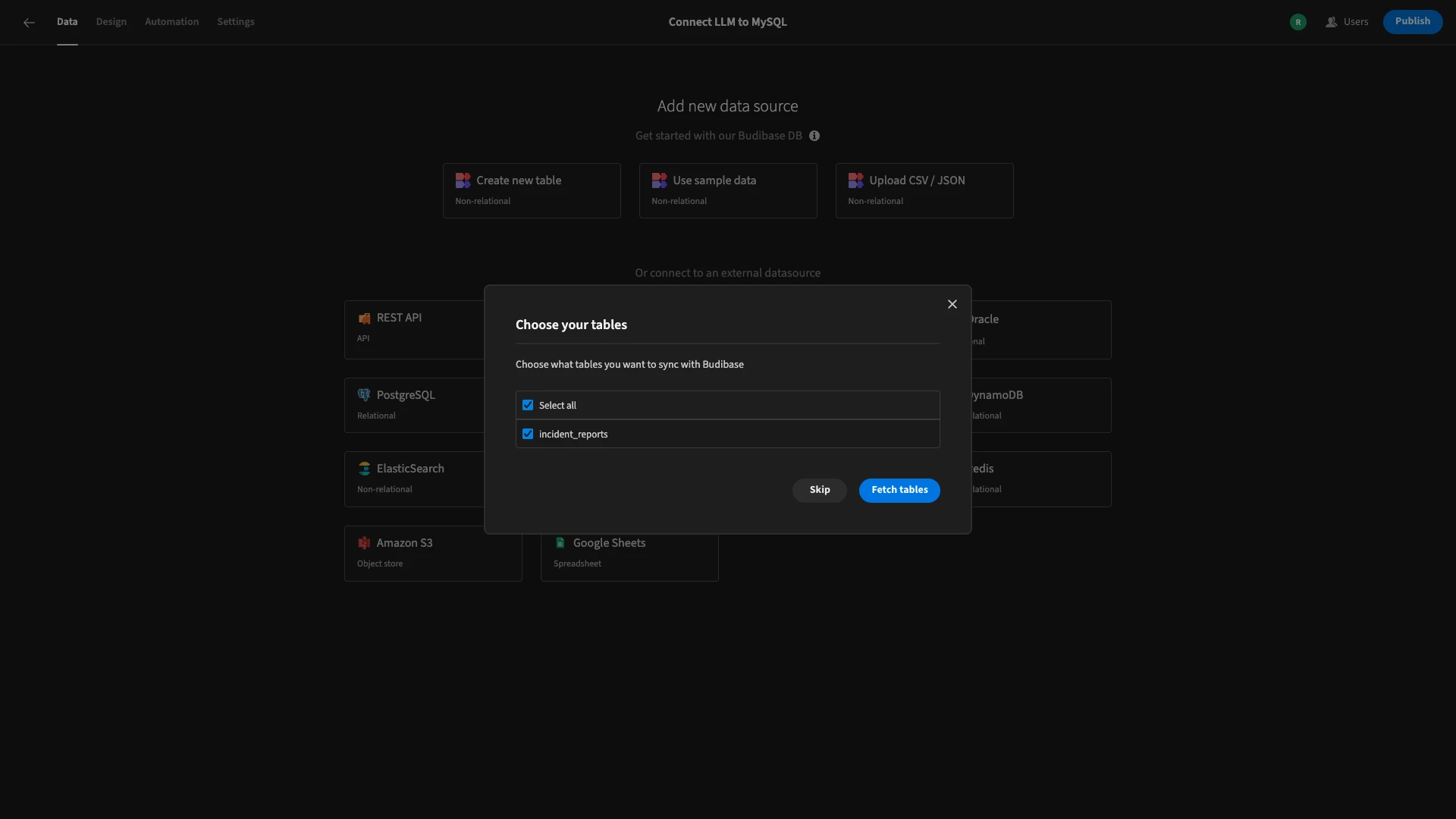

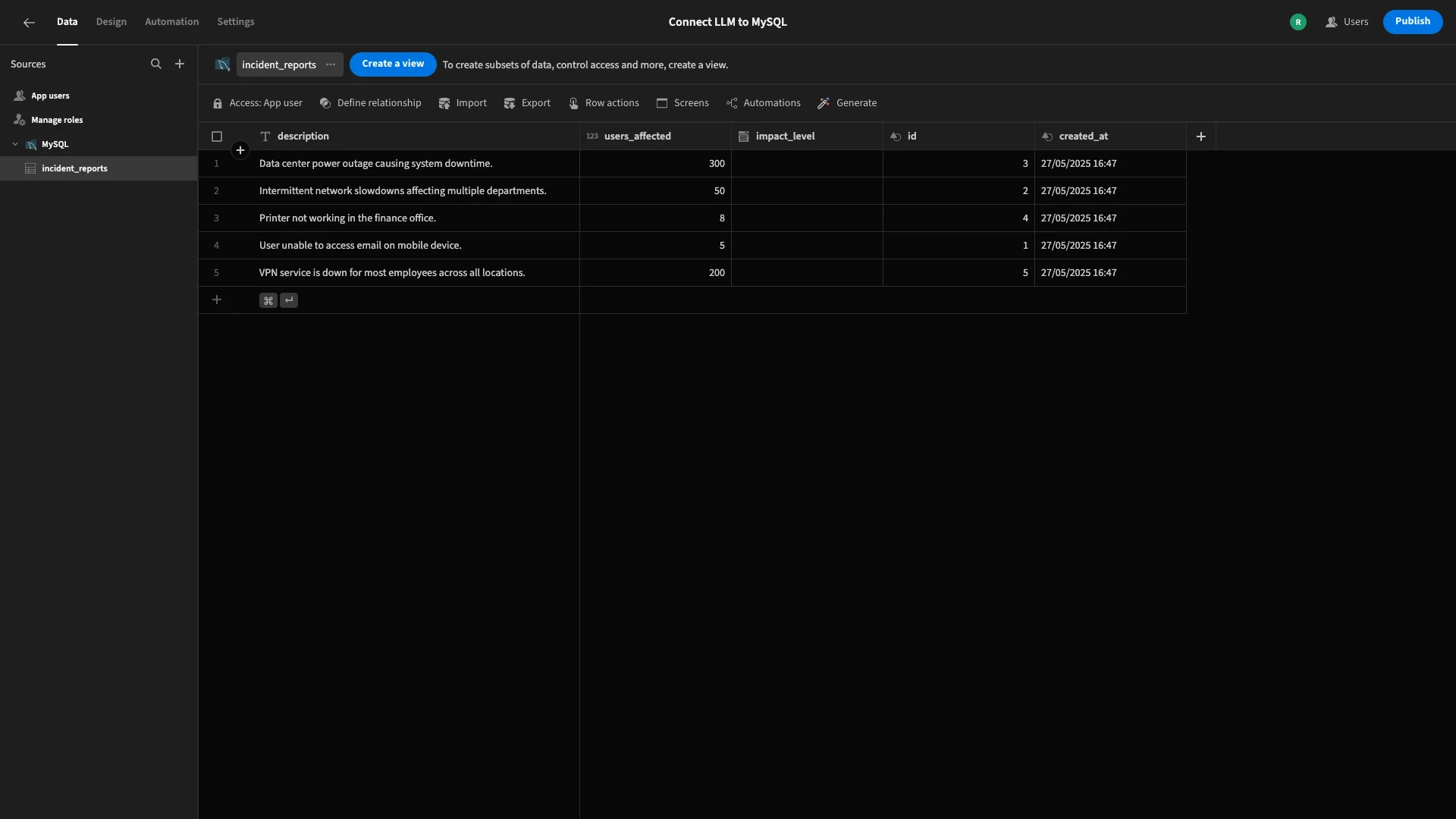

We’re then asked which of our database’s tables we’d like to Fetch, making them queryable within 大象传媒. We’re selecting our database’s one and only table, incident_reports.

Here’s how this will look in 大象传媒’s Data section.

Already, we can use the spreadsheet-like interface to edit values or make key changes such as enforcing access control rules.

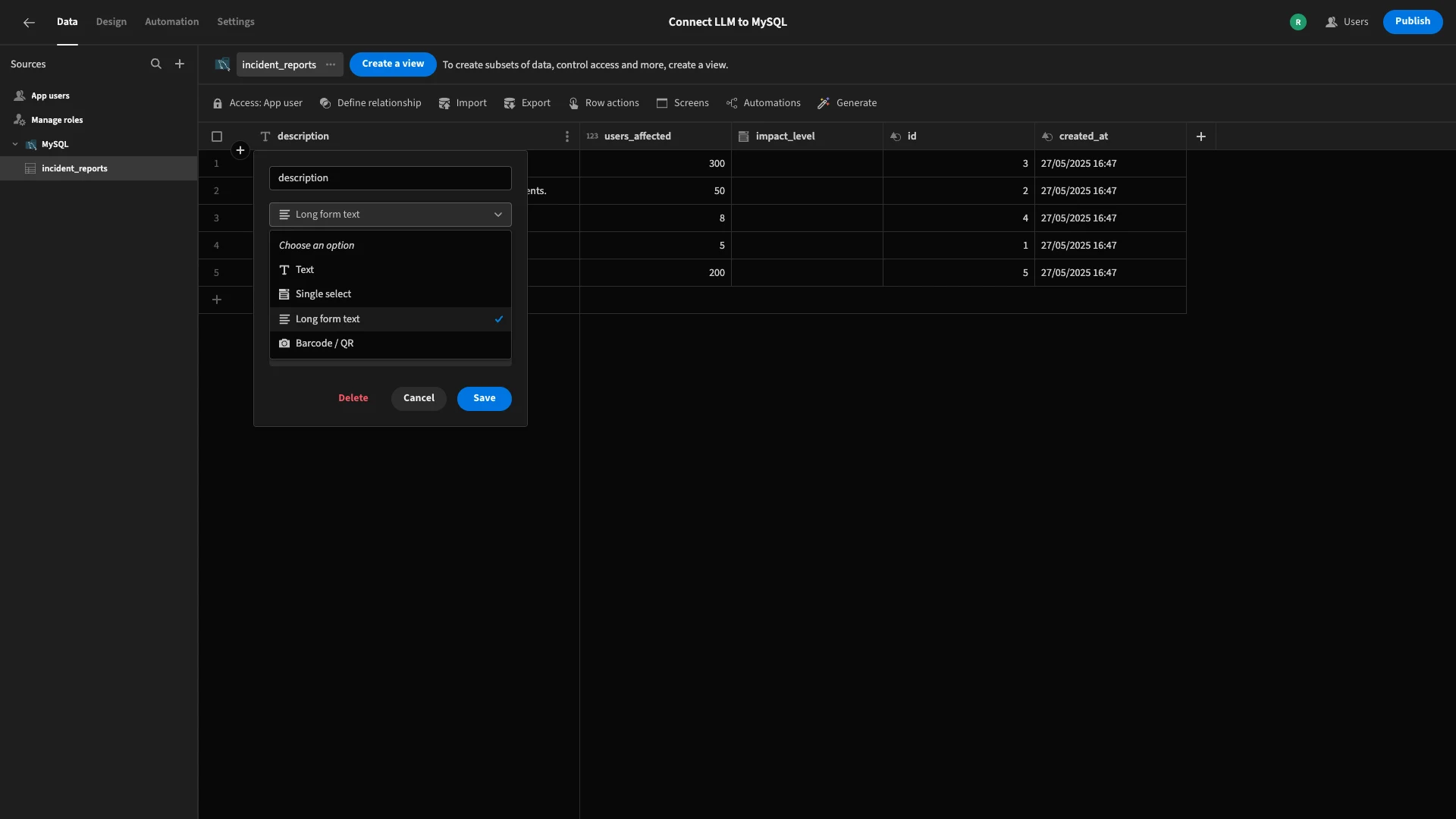

Today, we’re going to make one small change in the Data section that will make our life a little easier when it comes time to autogenerate a form UI.

Specifically, our description field currently has the Text type. We’re going to update this to Long Form Text, changing how 大象传媒 handles it, in order to provide more space to record incident details in our end-user app.

Of the other columns, id and created_at will be automatically generated by the database when a row is added, while description and users_affected are the fields we’ll populate with user-submitted data.

So, that’s our data model ready to go.
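For readers who want to build along, the sketch below shows one way a look-alike incident_reports table could be defined. The column names come from this tutorial, but the exact schema is not shown here, so every column type and constraint is an assumption. The SQL is held in JavaScript strings so it can be passed to whichever MySQL client you prefer, and `buildInsert` is a hypothetical helper.

```javascript
// Assumed schema: column names come from the tutorial, types are guesses.
const CREATE_INCIDENT_REPORTS = `
CREATE TABLE incident_reports (
  id INT AUTO_INCREMENT PRIMARY KEY,
  description TEXT NOT NULL,
  users_affected INT NOT NULL,
  impact_level VARCHAR(10) NULL,
  created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);`.trim();

// Parameterized insert covering only the two user-supplied fields;
// id and created_at are generated by the database.
const INSERT_INCIDENT =
  "INSERT INTO incident_reports (description, users_affected) VALUES (?, ?);";

// Pair the statement with its values, ready for a client that supports ? placeholders.
function buildInsert(description, usersAffected) {
  return { sql: INSERT_INCIDENT, values: [description, Number(usersAffected)] };
}
```

Leaving impact_level nullable reflects the idea that the value is filled in after the row is created, rather than supplied by the user.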

2. Configuring our OpenAI integration

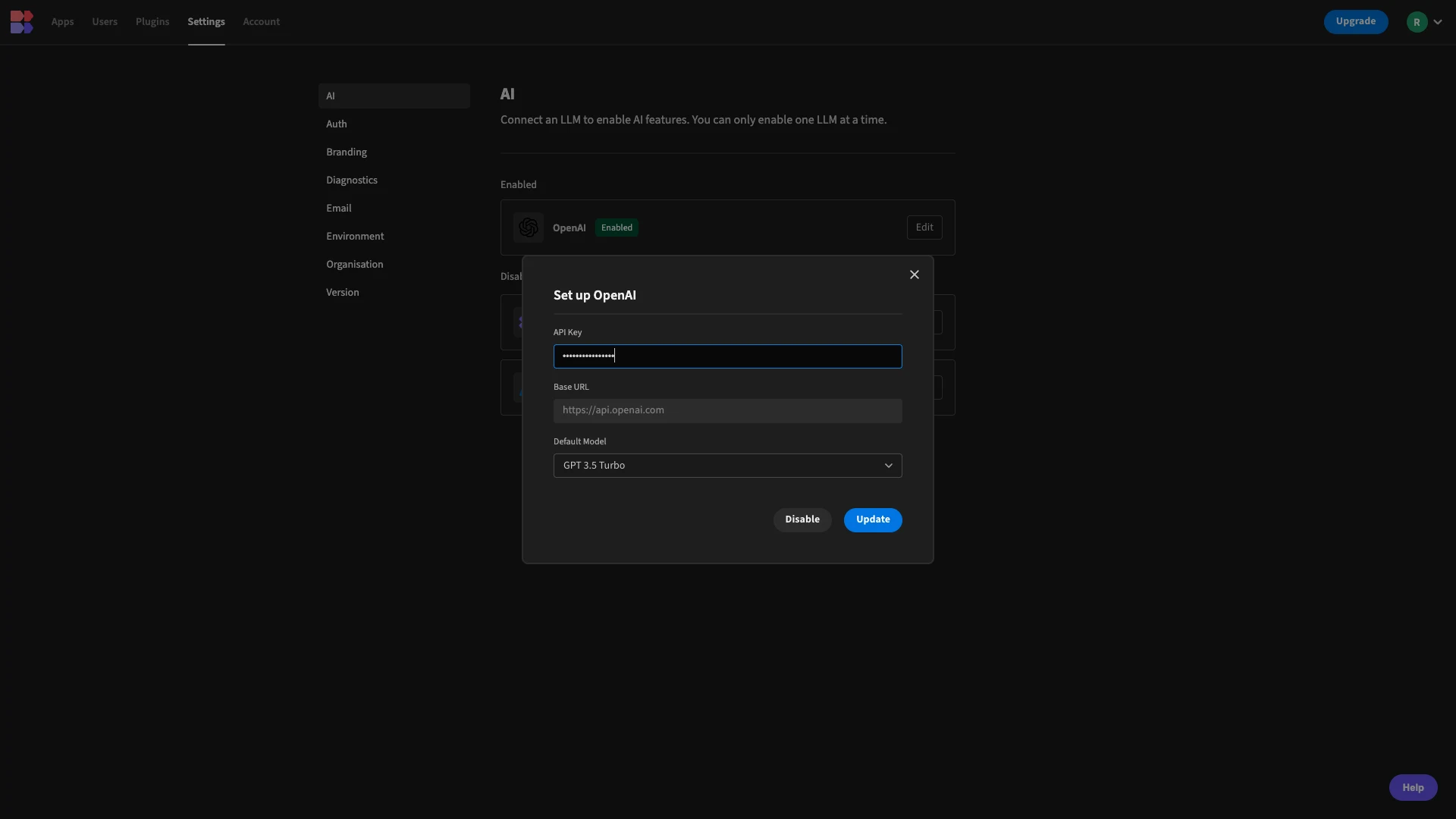

Next, we need to do a little bit of configuration to access 大象传媒’s OpenAI integration. 大象传媒 offers connectivity for LLMs, including OpenAI and Azure, as well as custom AI configs for enterprise customers.

To set this up, we need to exit our app project and head to the Settings tab within the 大象传媒 portal.

Here, we’re presented with the AI sub-menu, where we can enter configuration details for different models.

To enable a connection to OpenAI, we can hit edit. We’re then shown a dialog where we can enter our API key and select a model. For demo purposes, we’ll use GPT-3.5 Turbo.

And that’s all we need to do.

3. Building an AI-powered automation rule

Now, we’re ready to start building the automation logic that will connect our LLM to our MySQL database.

Here’s a quick overview of how this will work:

- The automation is triggered when a new row is added to the incident_reports table.

- The description and users_affected values will dynamically be added to a pre-written prompt containing instructions for the LLM on how to determine a value for the impact_level column.

- We’ll update the original trigger row, using the returned value to populate the impact_level column.
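The three steps above can be sketched in plain JavaScript. This is not how the platform implements its automations internally. It is a minimal illustration in which the LLM call and the database update are injected as functions, and `fakeLlm`, `buildPrompt`, and `onRowCreated` are hypothetical names.

```javascript
// Stand-in for the LLM call; a real implementation would send the prompt over HTTP.
async function fakeLlm(prompt) {
  return JSON.stringify({ impact_level: "High" });
}

// Build the prompt from the two user-submitted columns.
function buildPrompt(row) {
  return (
    "Classify the impact level of this incident as Low, Medium, or High.\n" +
    `Incident description: ${row.description}\n` +
    `Number of users affected: ${row.users_affected}\n` +
    'Respond ONLY with a JSON object like {"impact_level": "<Low|Medium|High>"}'
  );
}

// Runs when a row is created: prompt the model, then write the result
// back to the same row that triggered the run.
async function onRowCreated(row, callLlm, updateRow) {
  const raw = await callLlm(buildPrompt(row));
  const { impact_level } = JSON.parse(raw);
  await updateRow(row.id, { impact_level });
  return impact_level;
}
```

Passing `callLlm` and `updateRow` in as arguments means the flow can be exercised with an in-memory table and a canned response before it is pointed at a real model or database.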

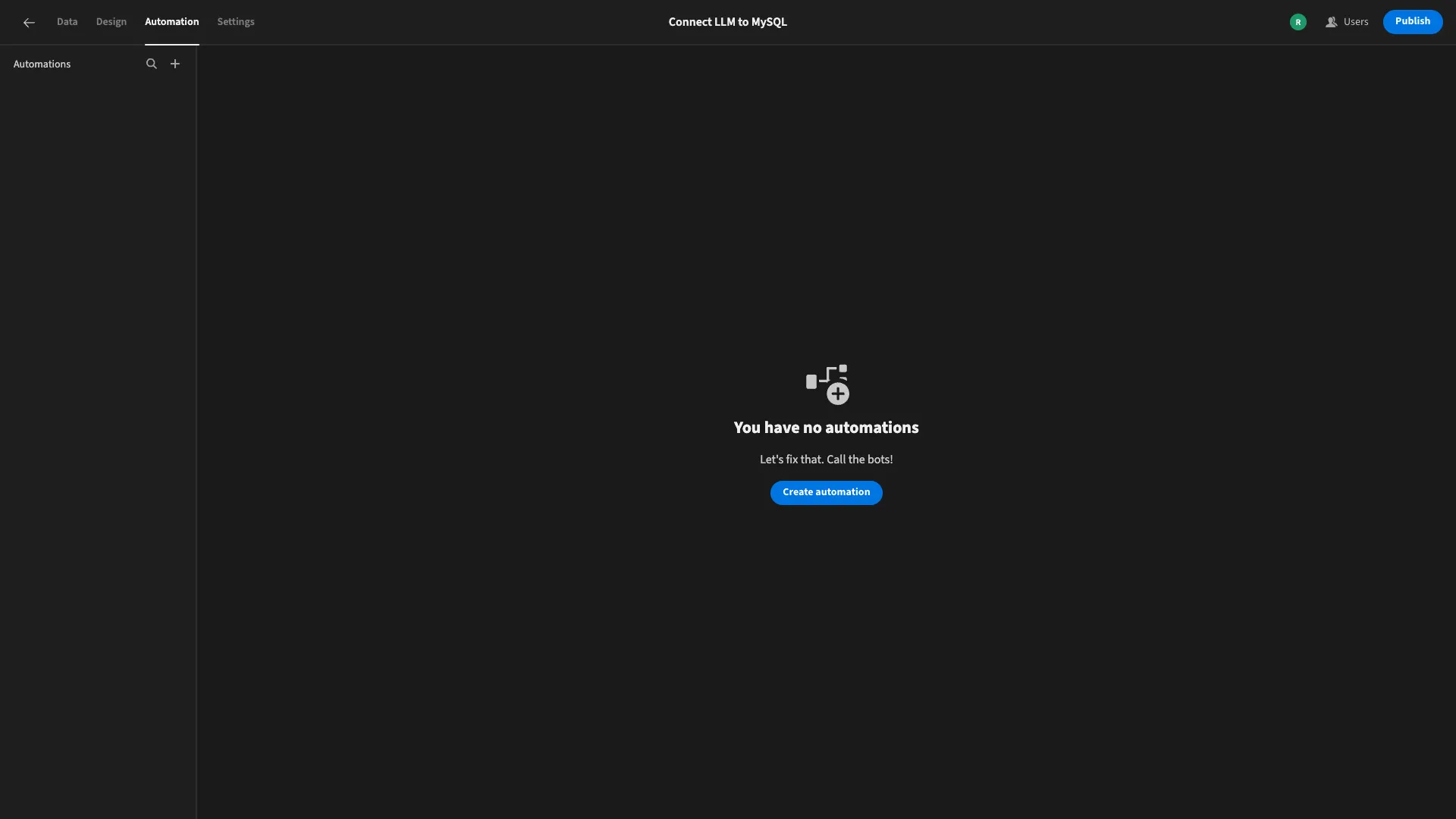

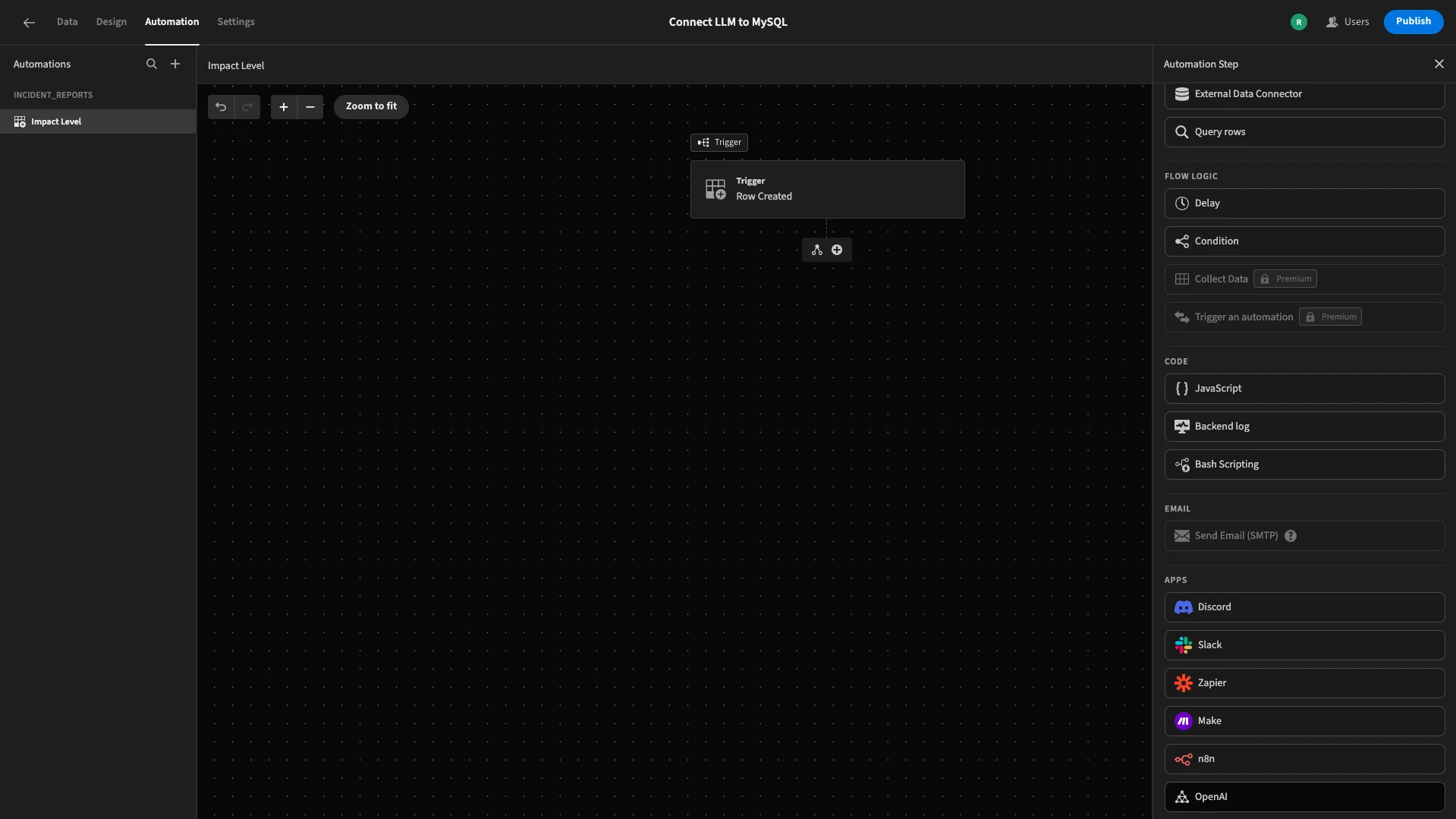

The first thing we need to do is head to 大象传媒’s Automation section. Here, we’ll see a CTA to build our first automation flow.

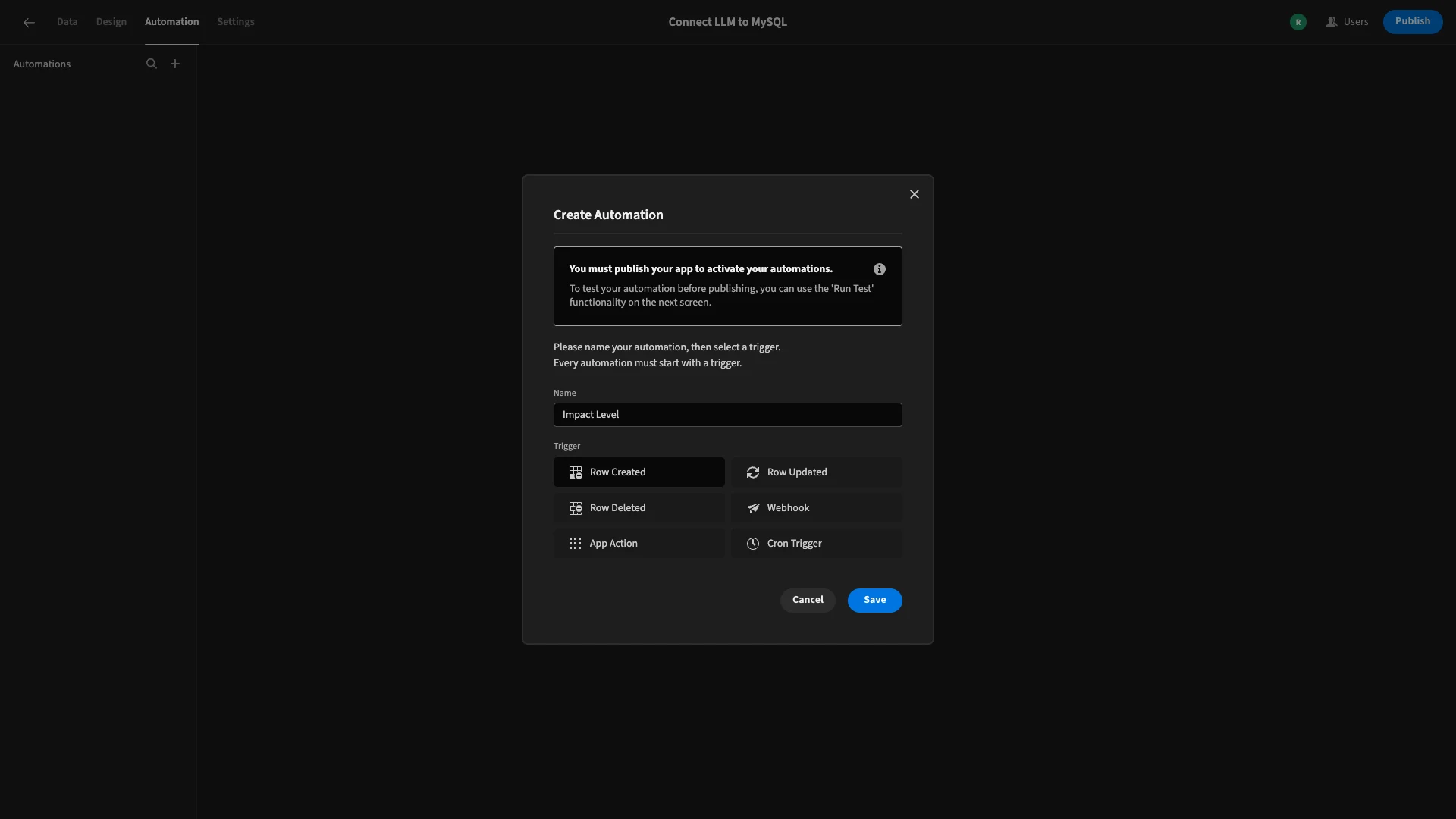

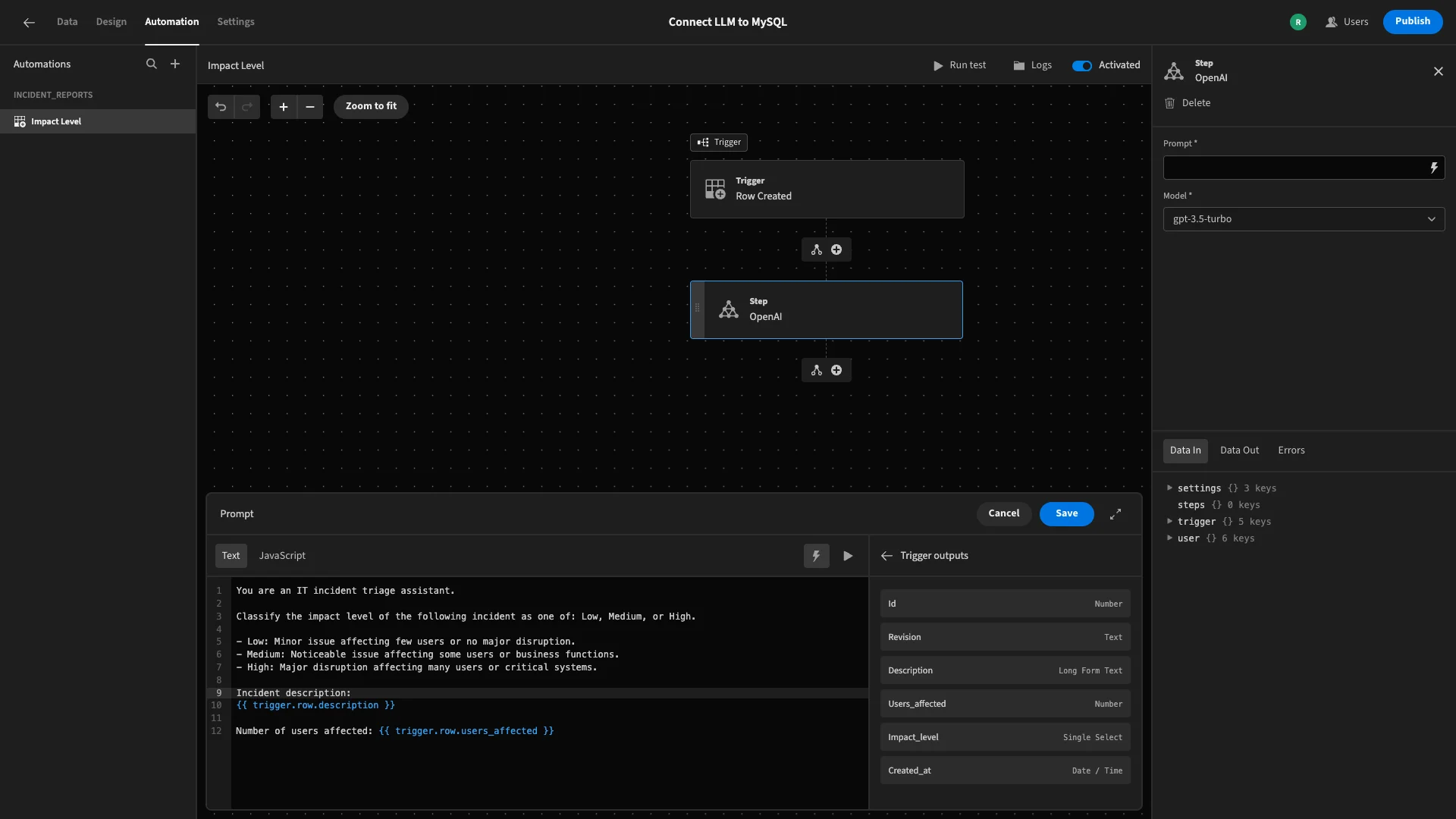

When we click on this, we’re prompted to give our new rule a name and choose a trigger. We’ll call our rule Impact Level and select the Row Created trigger.

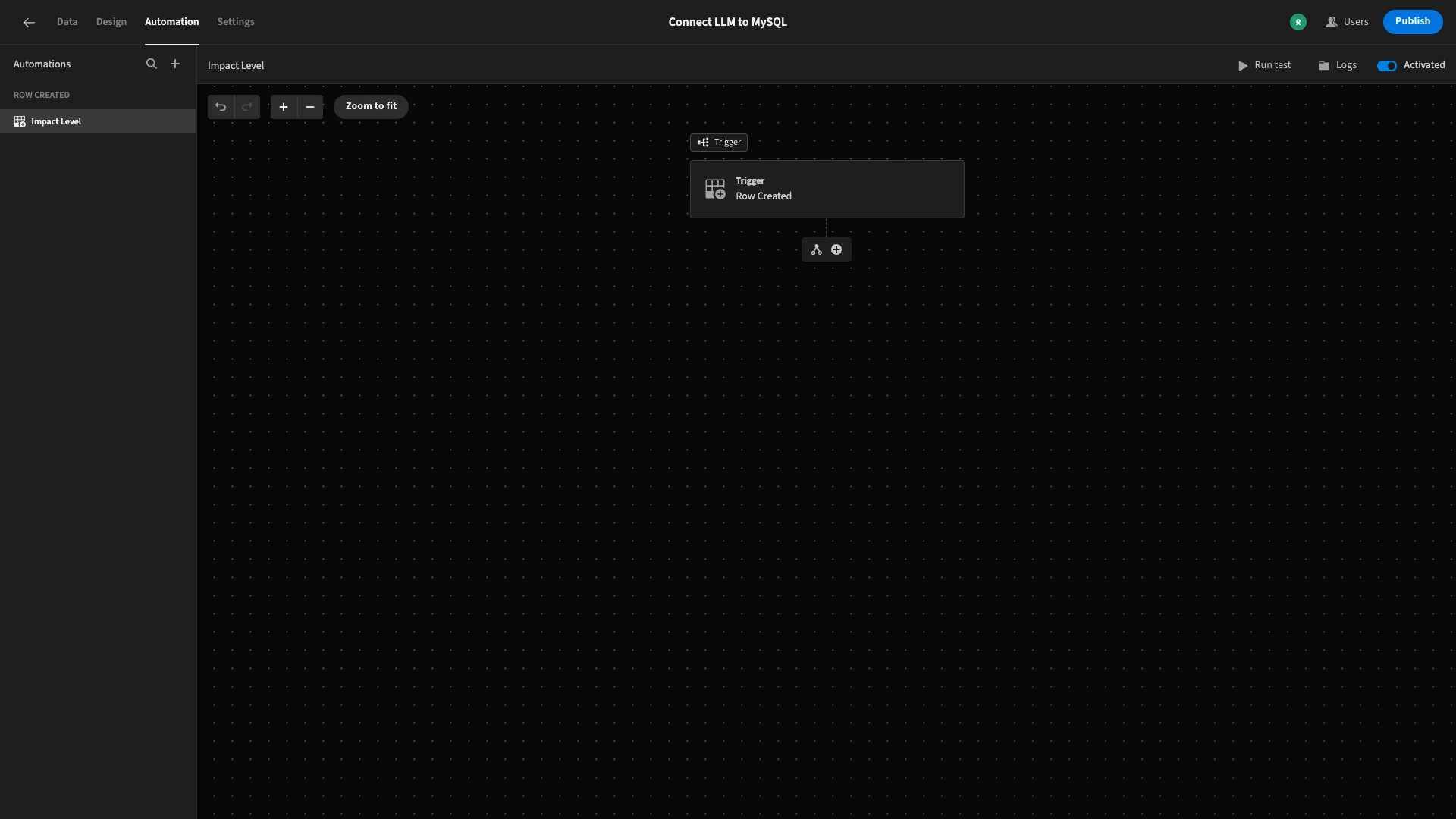

Here’s what this will look like.

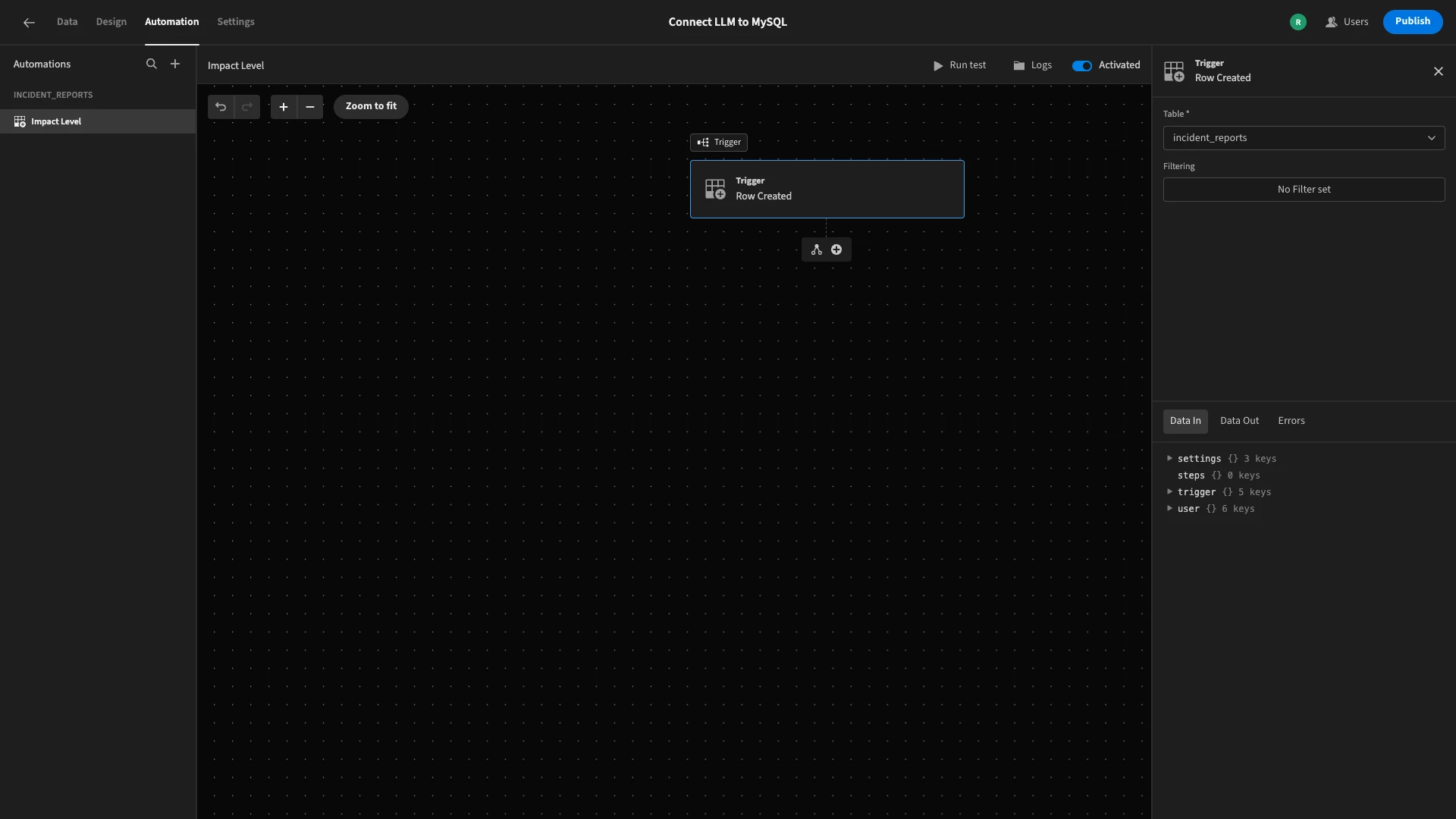

We’ll click on our trigger to access its configuration options. Here, all we need to do is set the Table to our incident_reports table.

There’s also an option to add a filter, which would allow us to specify additional conditions for when our automation should fire, but today, we want it to execute any time a row is added, regardless of its contents.

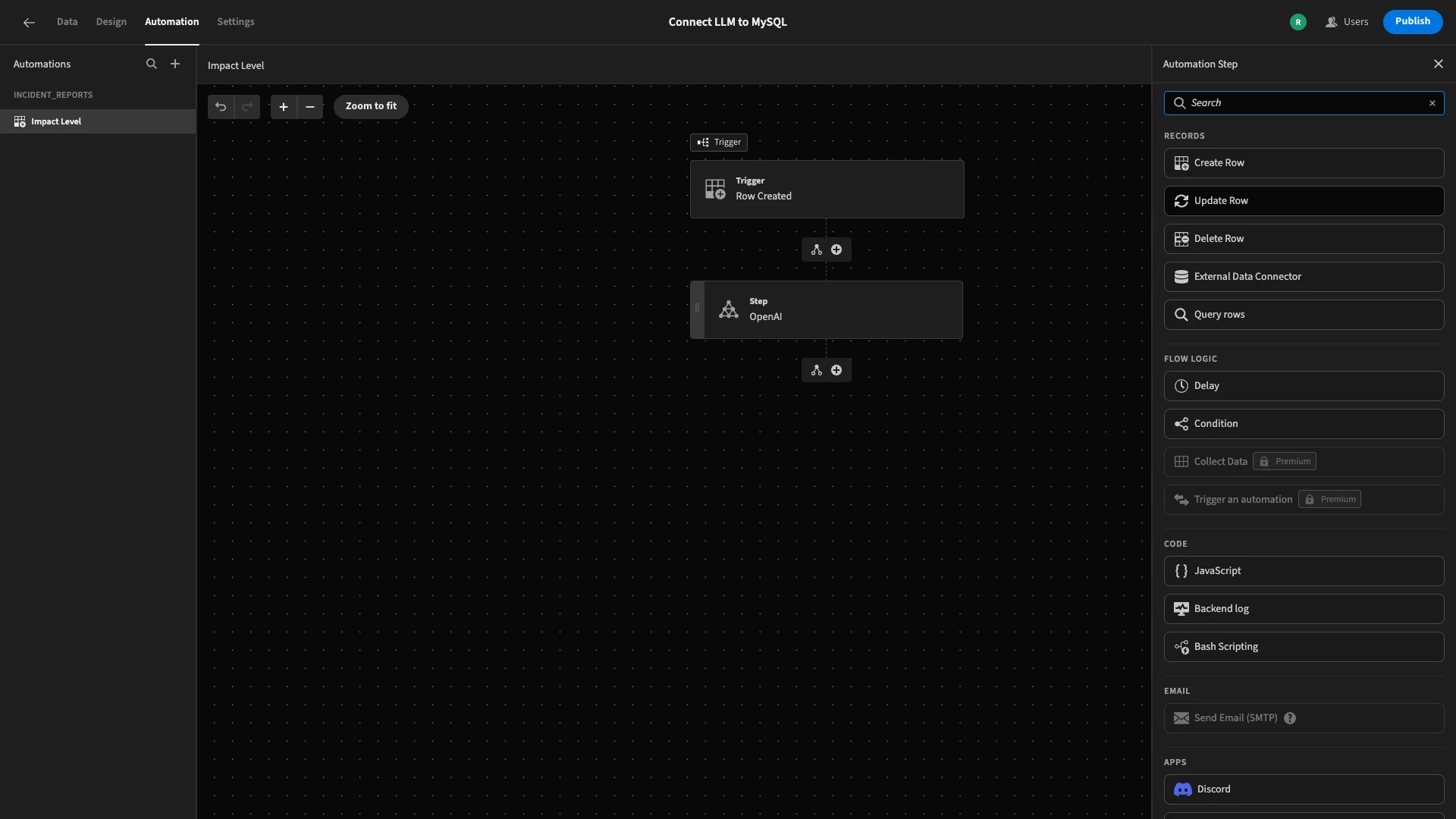

Now, we can start defining which actions should be taken in response to our trigger.

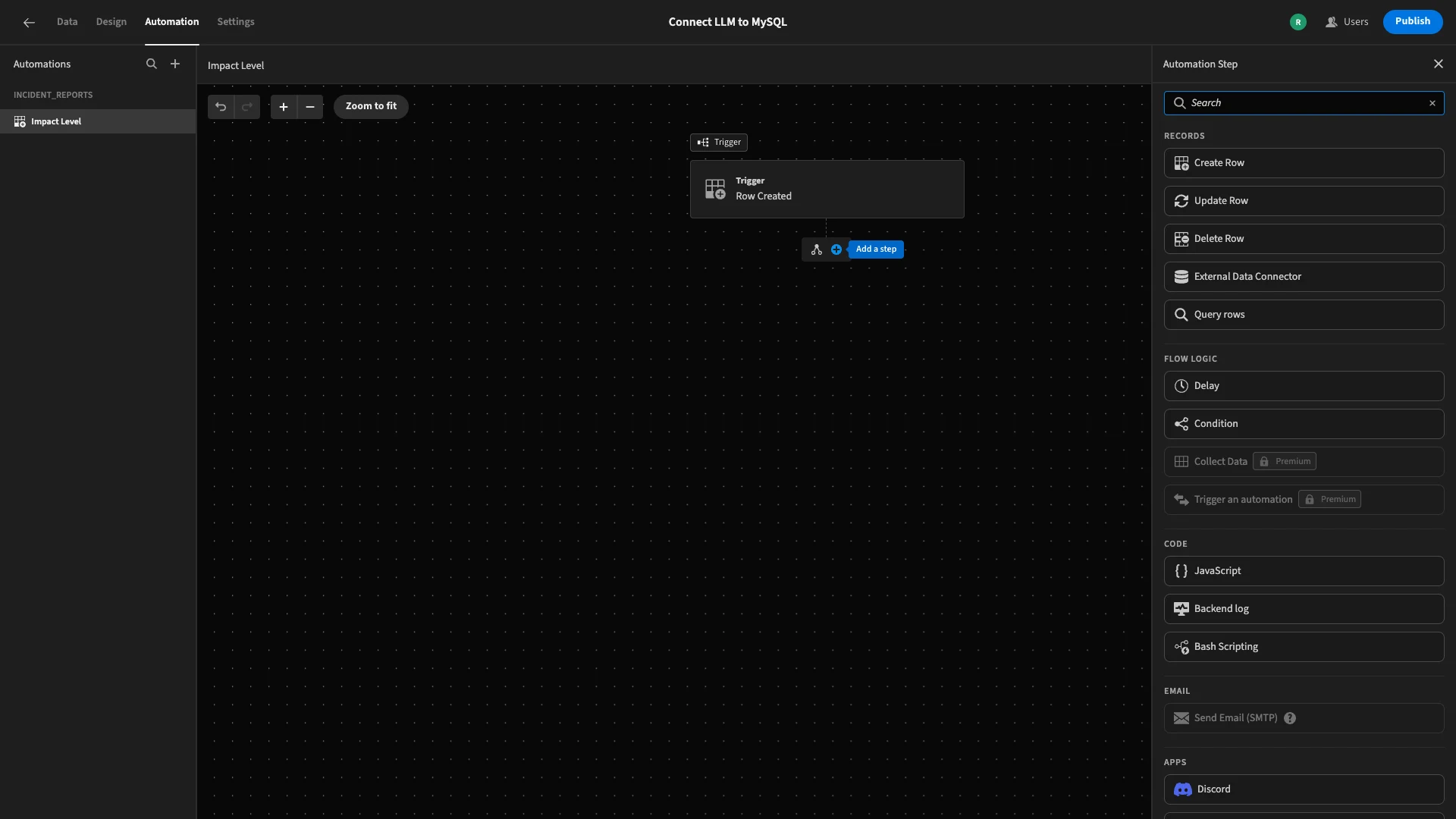

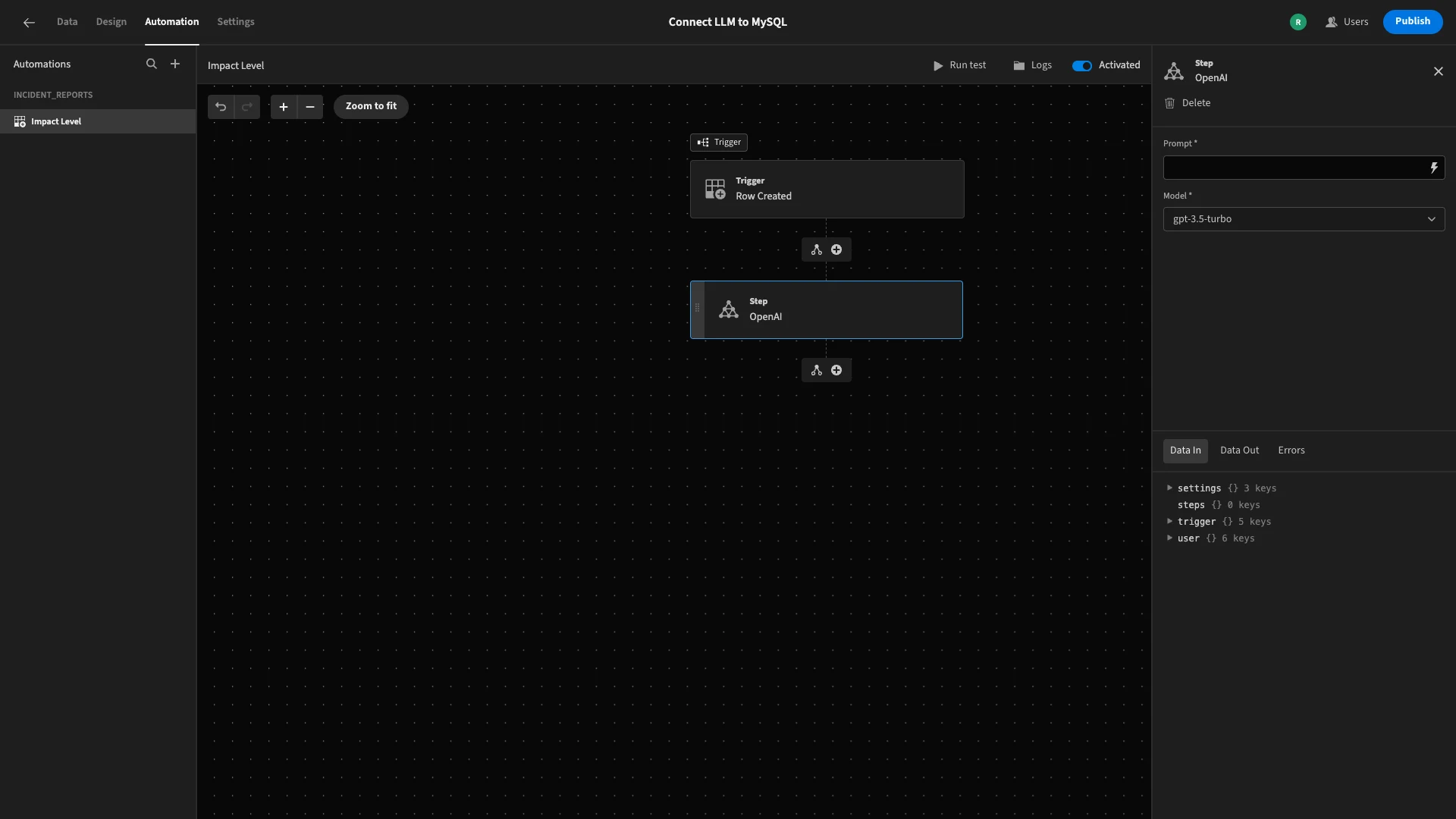

First, we’ll hit the + icon to access a list of available automation actions.

At the bottom, we’ll select OpenAI.

Again, we can access the settings for this in a side panel to the right of the screen.

Here, we can write our prompt and select a model. We’re sticking with GPT-3.5 Turbo.

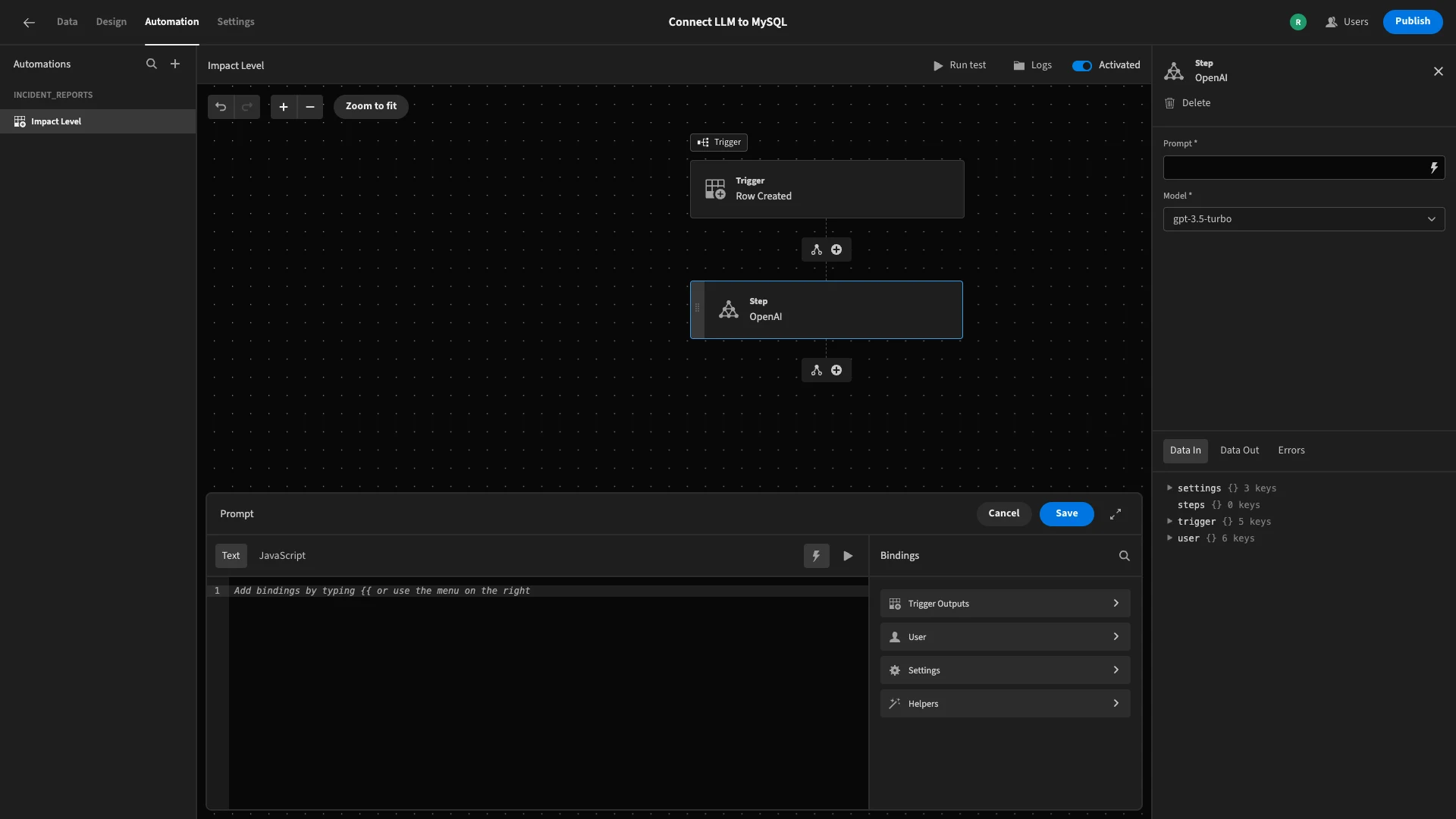

We’ll hit the lightning bolt icon in the Prompt field to open the bindings drawer.

Our prompt will do three separate things.

- Determine the logic for how a value for the impact_level column should be derived.

- Provide values for the description and users_affected columns.

- Specify the format we need our response to be returned in.

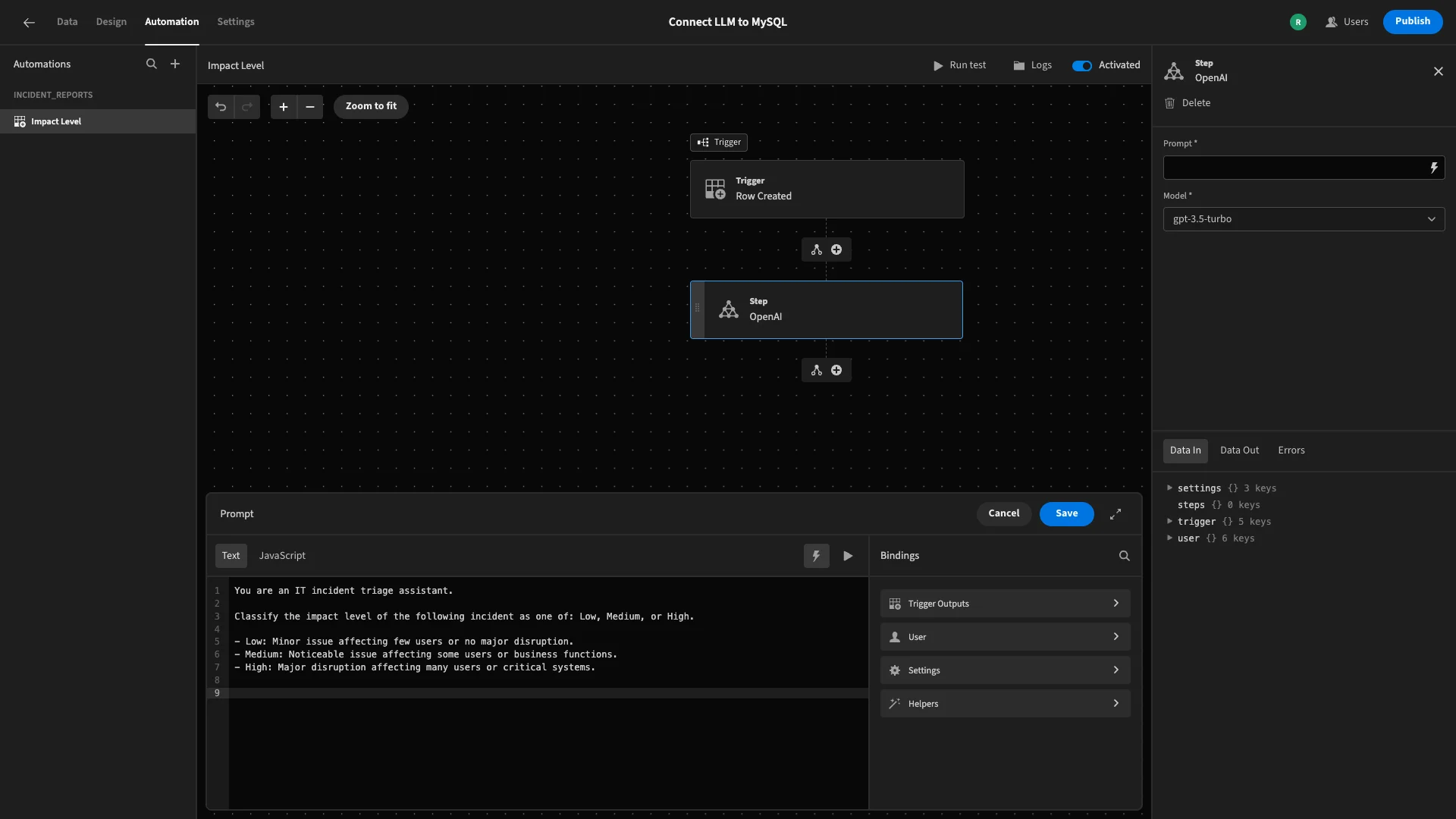

For demo purposes, we’re using the following basic logic to assess the submission, although you could modify this however you need to match your own workflow.

    You are an IT incident triage assistant.

    Classify the impact level of the following incident as one of: Low, Medium, or High.

    - Low: Minor issue affecting few users or no major disruption.
    - Medium: Noticeable issue affecting some users or business functions.
    - High: Major disruption affecting many users or critical systems.

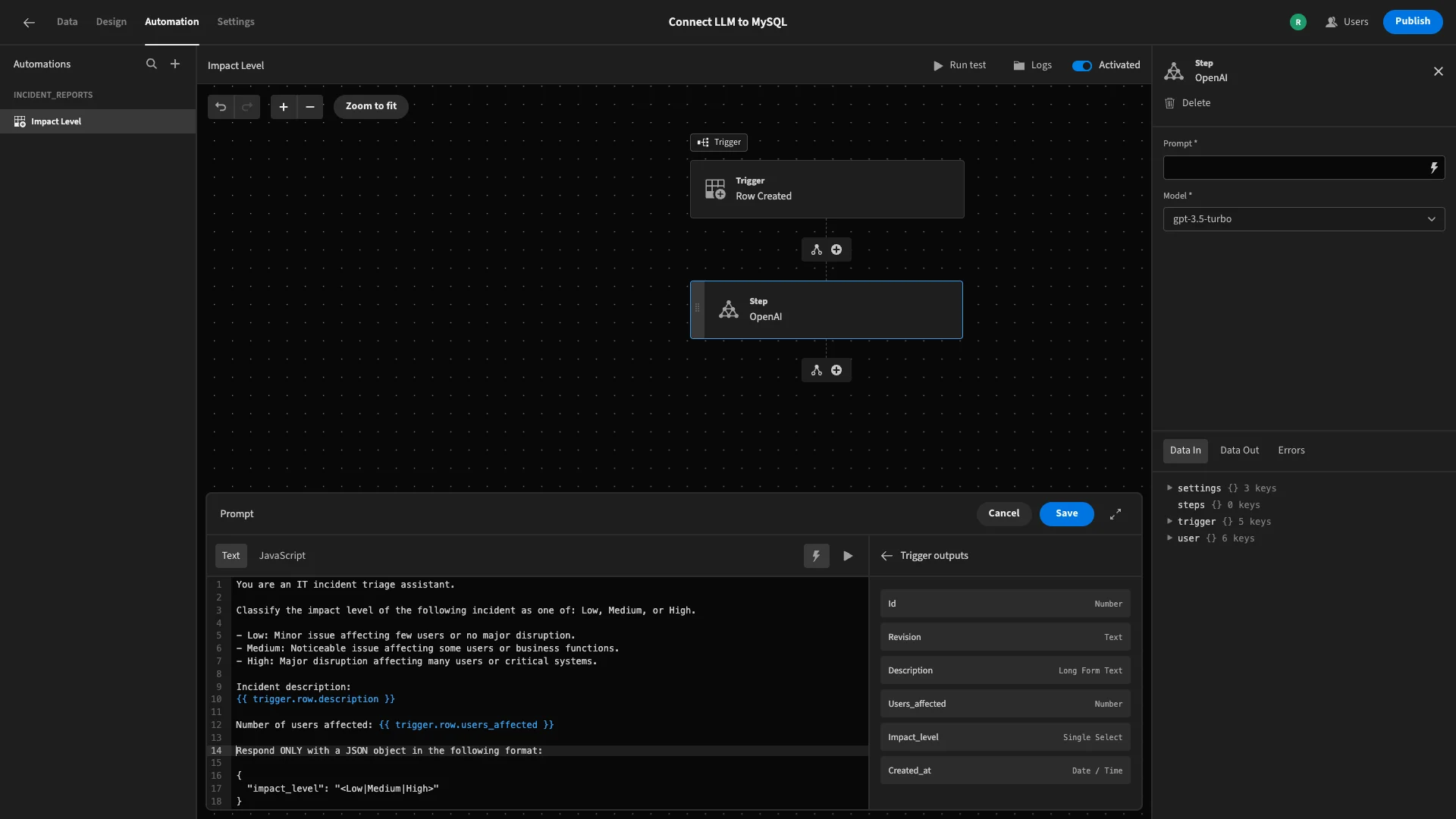

We can access the values we need from our trigger row under Trigger Outputs. We’ll add the following to our prompt to signpost these for the model.

    Incident description:
    {{ trigger.row.description }}

    Number of users affected: {{ trigger.row.users_affected }}

Lastly, we’ll provide an example of the JSON object we’d like returned.

    Respond ONLY with a JSON object in the following format:

    {
      "impact_level": "<Low|Medium|High>"
    }

To recap, here’s the final prompt.

    You are an IT incident triage assistant.

    Classify the impact level of the following incident as one of: Low, Medium, or High.

    - Low: Minor issue affecting few users or no major disruption.
    - Medium: Noticeable issue affecting some users or business functions.
    - High: Major disruption affecting many users or critical systems.

    Incident description:
    {{ trigger.row.description }}

    Number of users affected: {{ trigger.row.users_affected }}

    Respond ONLY with a JSON object in the following format:

    {
      "impact_level": "<Low|Medium|High>"
    }

We’ll finish by hitting Save.
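To illustrate what the double-curly bindings in the prompt do conceptually, here is a small sketch of a renderer that swaps each binding for a value from the trigger data. This is an approximation for explanation only, not the platform's actual binding engine. The function names `resolvePath` and `renderBindings` and the sample row values are hypothetical.

```javascript
// Resolve a dotted path such as "trigger.row.description" against a context object.
function resolvePath(context, path) {
  return path.split(".").reduce(
    (value, key) => (value == null ? undefined : value[key]),
    context
  );
}

// Replace each {{ path }} binding with its value; unknown bindings become empty strings.
function renderBindings(template, context) {
  return template.replace(/\{\{\s*([\w.]+)\s*\}\}/g, (_, path) => {
    const value = resolvePath(context, path);
    return value == null ? "" : String(value);
  });
}

const template =
  "Incident description:\n{{ trigger.row.description }}\n" +
  "Number of users affected: {{ trigger.row.users_affected }}";

// Sample trigger data standing in for a newly created row.
const rendered = renderBindings(template, {
  trigger: { row: { description: "VPN is down", users_affected: 120 } },
});
```

In this sketch, `rendered` holds the prompt text with the sample description and user count substituted in, which is the string the model would actually receive.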

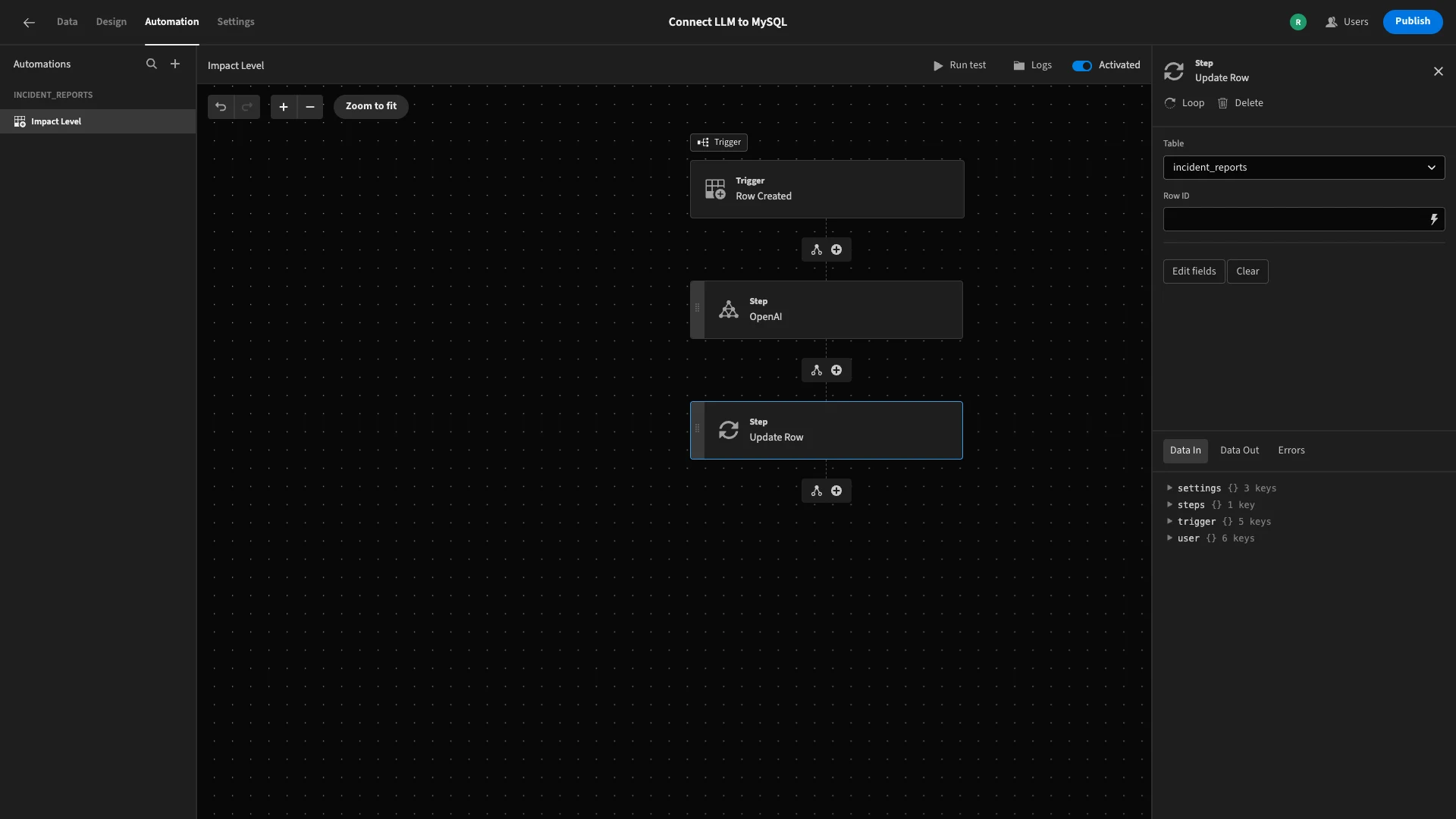

The last thing we need to do is take our LLM’s response and use it to populate the impact_level attribute in our trigger row.

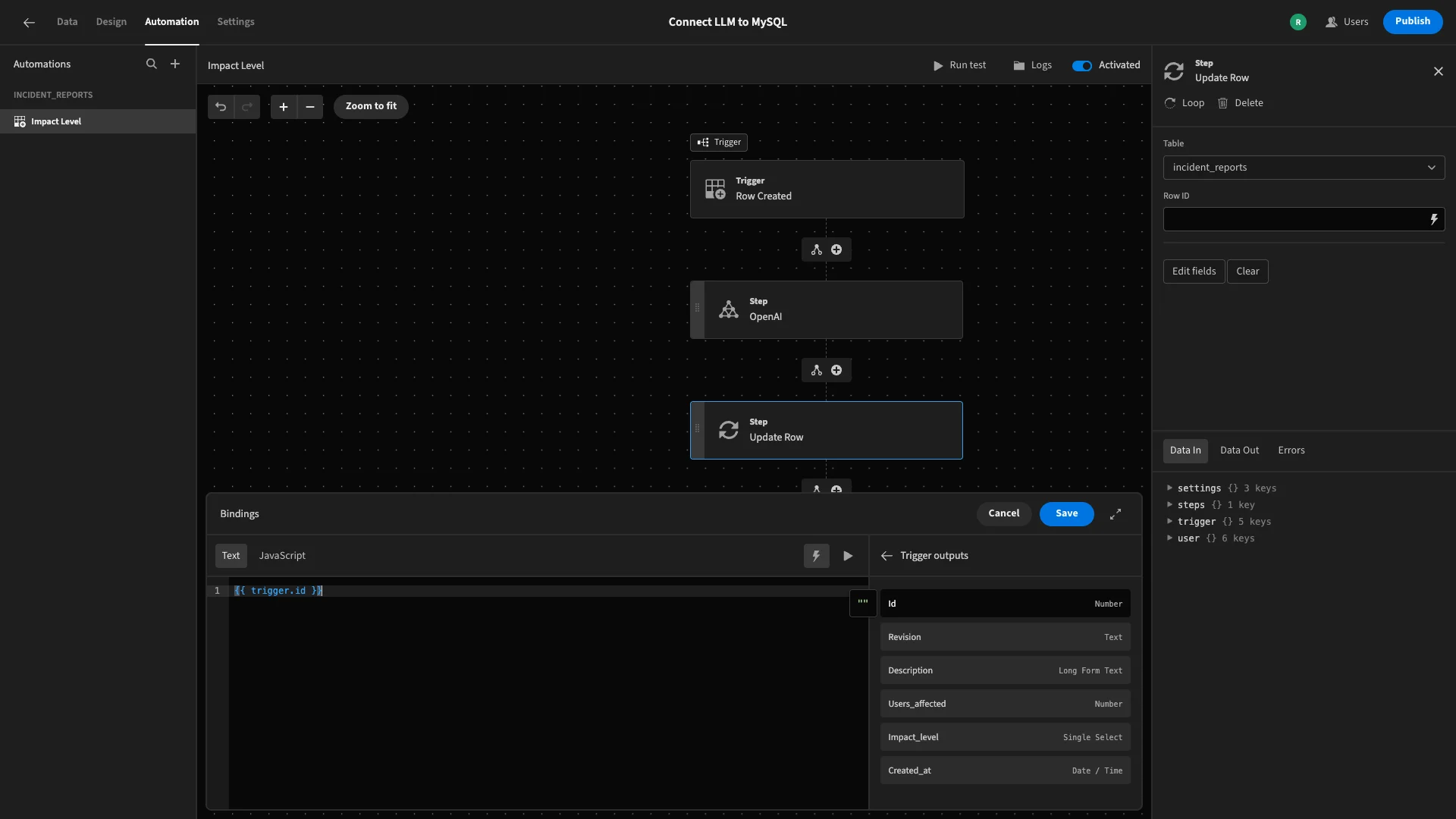

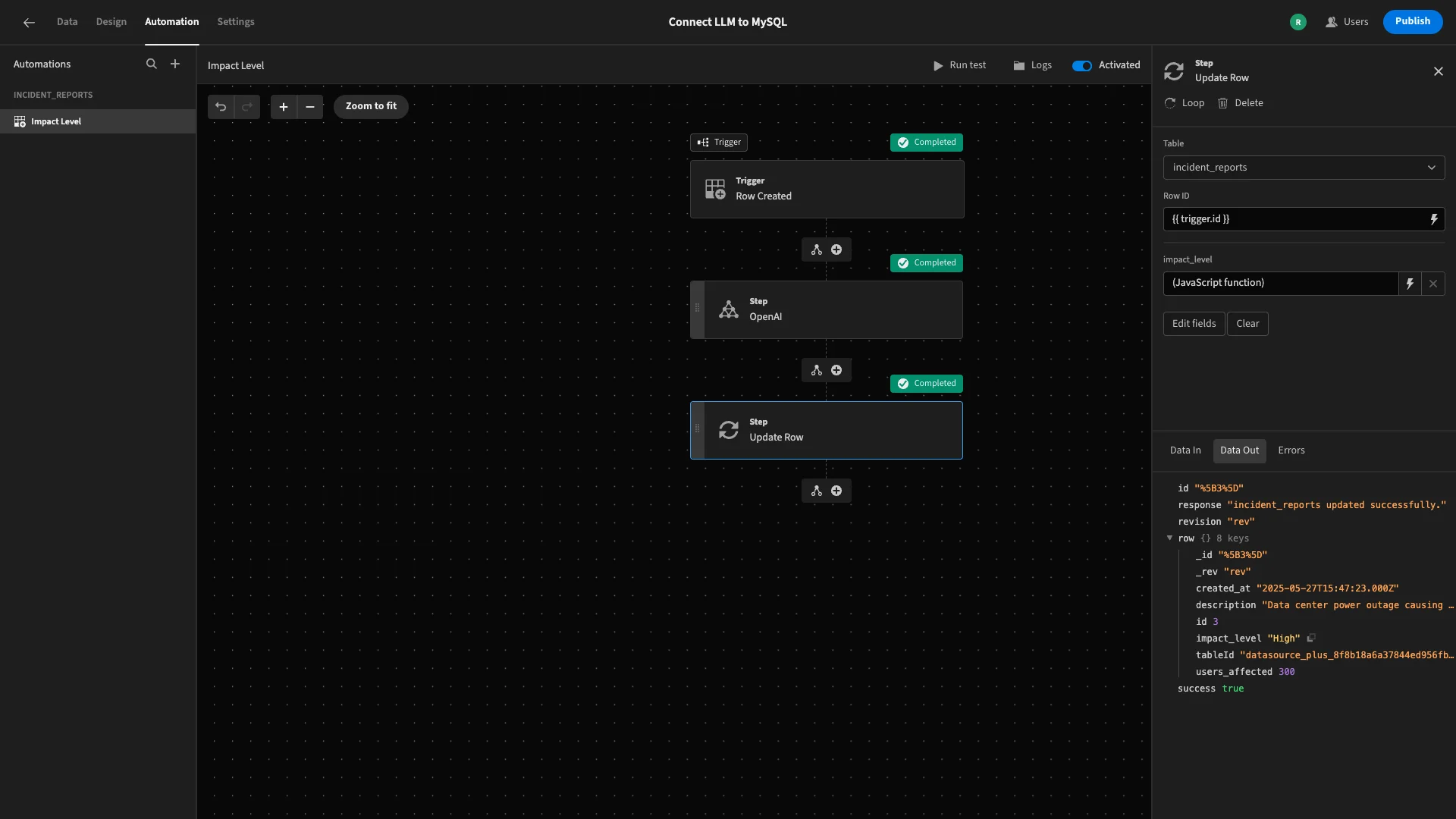

We’ll achieve this by adding a new action step, this time selecting Update Row.

As before, we’ll select incident_reports as our table.

This action step also has a setting called Row ID. This enables us to specify the particular row in our database that will be updated.

As we know, we want to target the original row that triggered our automation run.

To do this, we’ll bind our Row ID to {{ trigger.id }}.

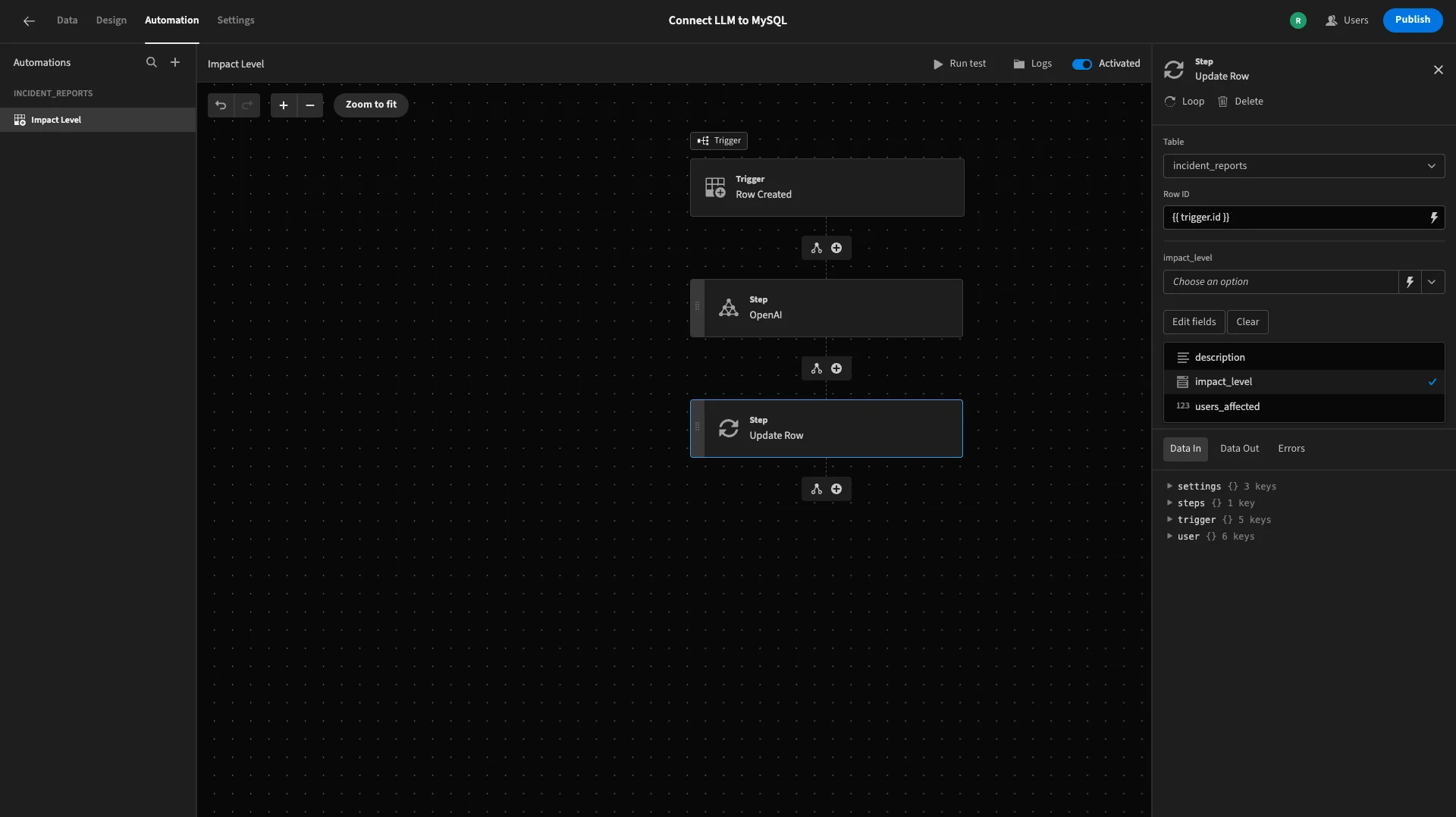

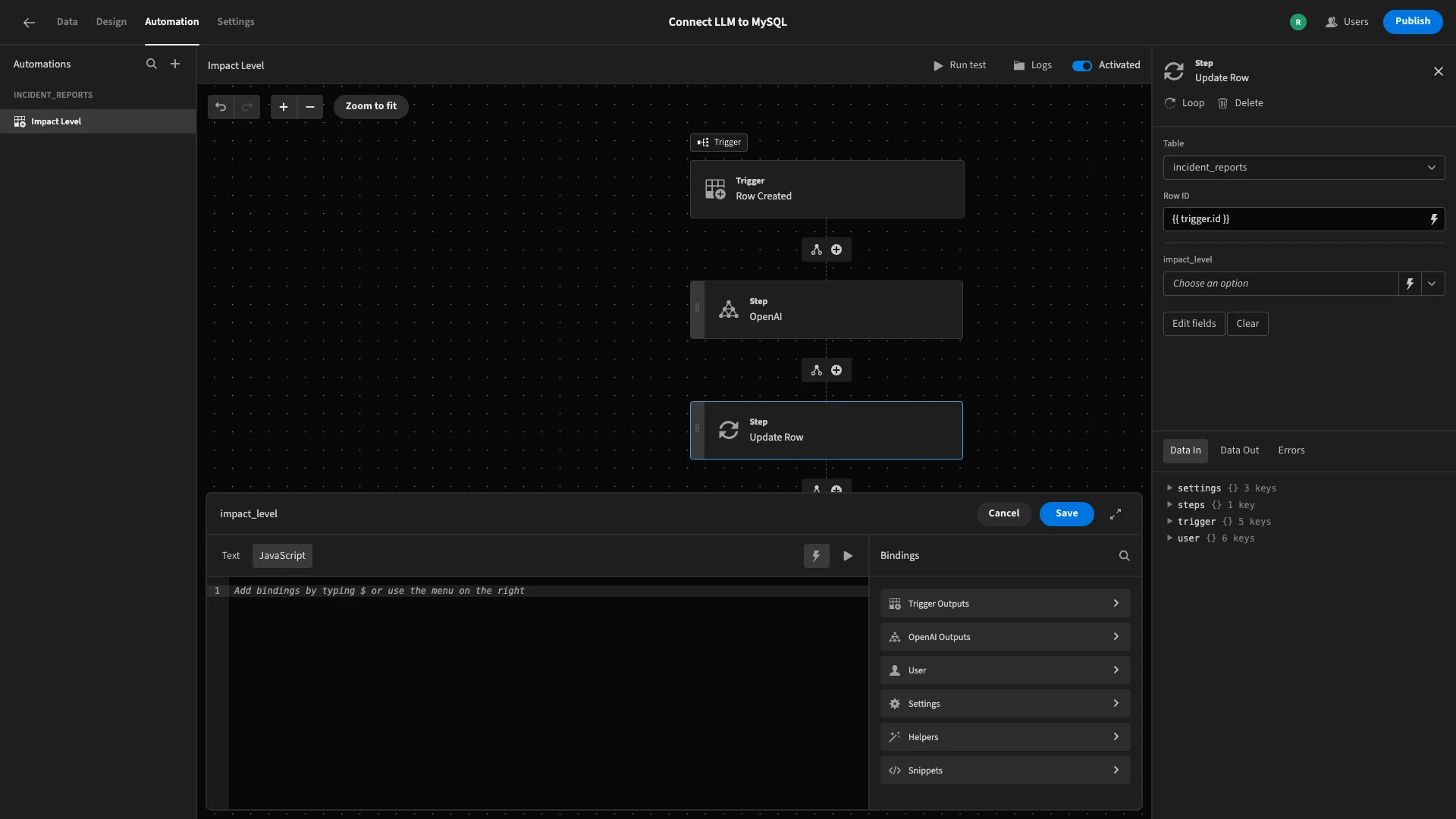

Then, we’ll hit edit fields and select impact_level.

We want to bind this to the value that our LLM determined. However, there’s an additional challenge here, in the sense that the value we need is wrapped in a JSON object.

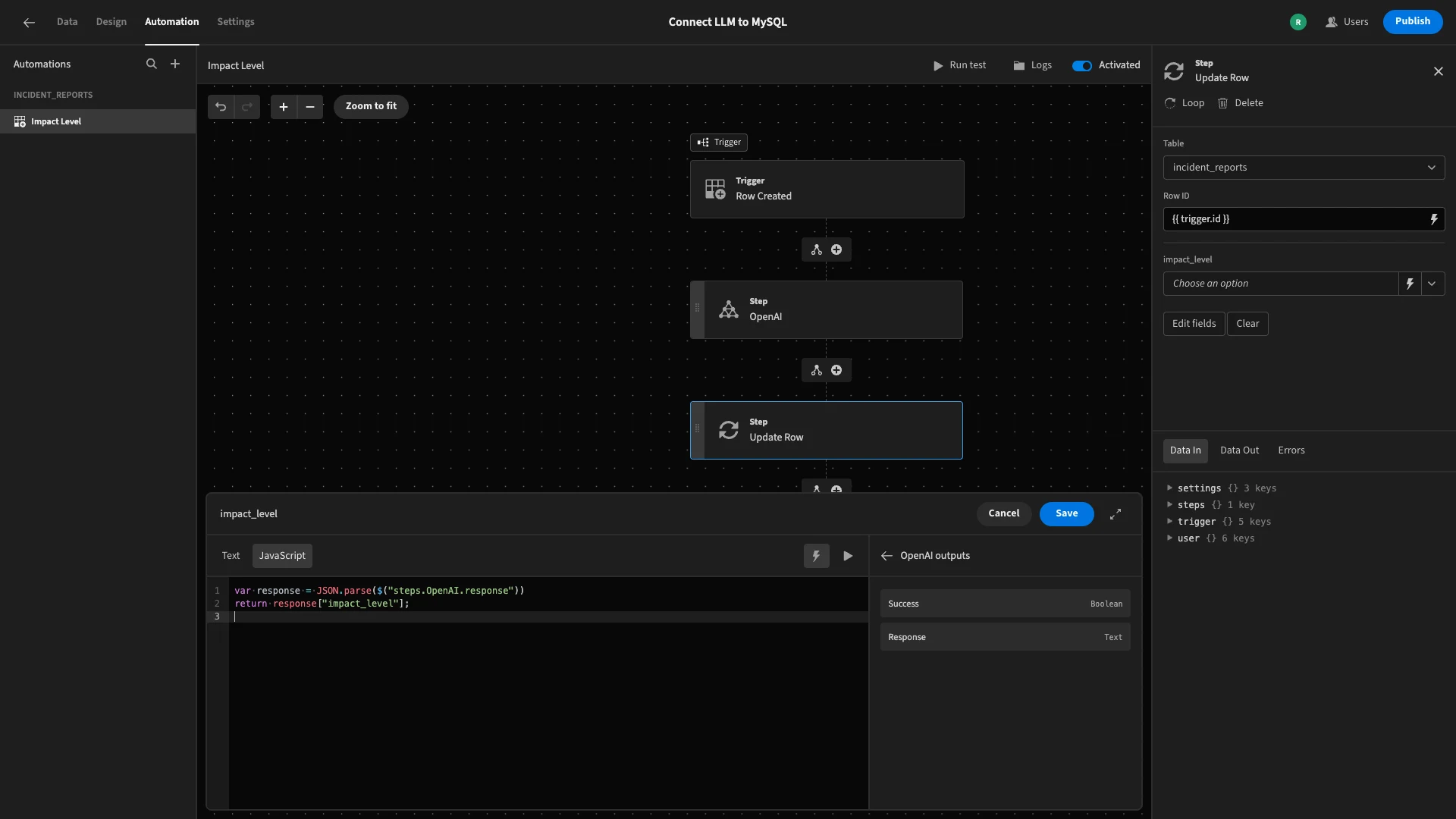

So, we’ll start by opening the bindings drawer, but this time we’ll select the JavaScript editor.

We’re going to declare a variable called response, and use the JSON.parse() method to set its value to the output of our OpenAI step. We’ll then return the impact_level value within this.

We’ll do this with the following code.

    // Parse the JSON string returned by the OpenAI step
    var response = JSON.parse($("steps.OpenAI.response"));
    // Return only the impact_level value
    return response["impact_level"];
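One caveat with the snippet above is that JSON.parse() throws if the model returns anything other than clean JSON, for example a response wrapped in Markdown code fences. The sketch below shows one possible, more defensive variant written as a standalone function. The name `parseImpactLevel` and the fallback behavior are assumptions, and it has not been verified inside the platform's JavaScript editor.

```javascript
const ALLOWED_LEVELS = ["Low", "Medium", "High"];

// Extract and validate impact_level from raw model output.
// Returns `fallback` when the output is not valid JSON or the value is not allowed.
function parseImpactLevel(raw, fallback = null) {
  // Strip optional Markdown code fences (three backticks, optionally followed by "json").
  const cleaned = String(raw)
    .trim()
    .replace(/^`{3}(?:json)?\s*/i, "")
    .replace(/\s*`{3}$/, "");
  try {
    const level = JSON.parse(cleaned)["impact_level"];
    return ALLOWED_LEVELS.includes(level) ? level : fallback;
  } catch (err) {
    // Invalid JSON, or a parsed value (such as null) that has no properties.
    return fallback;
  }
}
```

Restricting the result to the three allowed values means an unexpected answer from the model ends up as the fallback rather than being written straight into the impact_level column.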

And we’ll hit save.

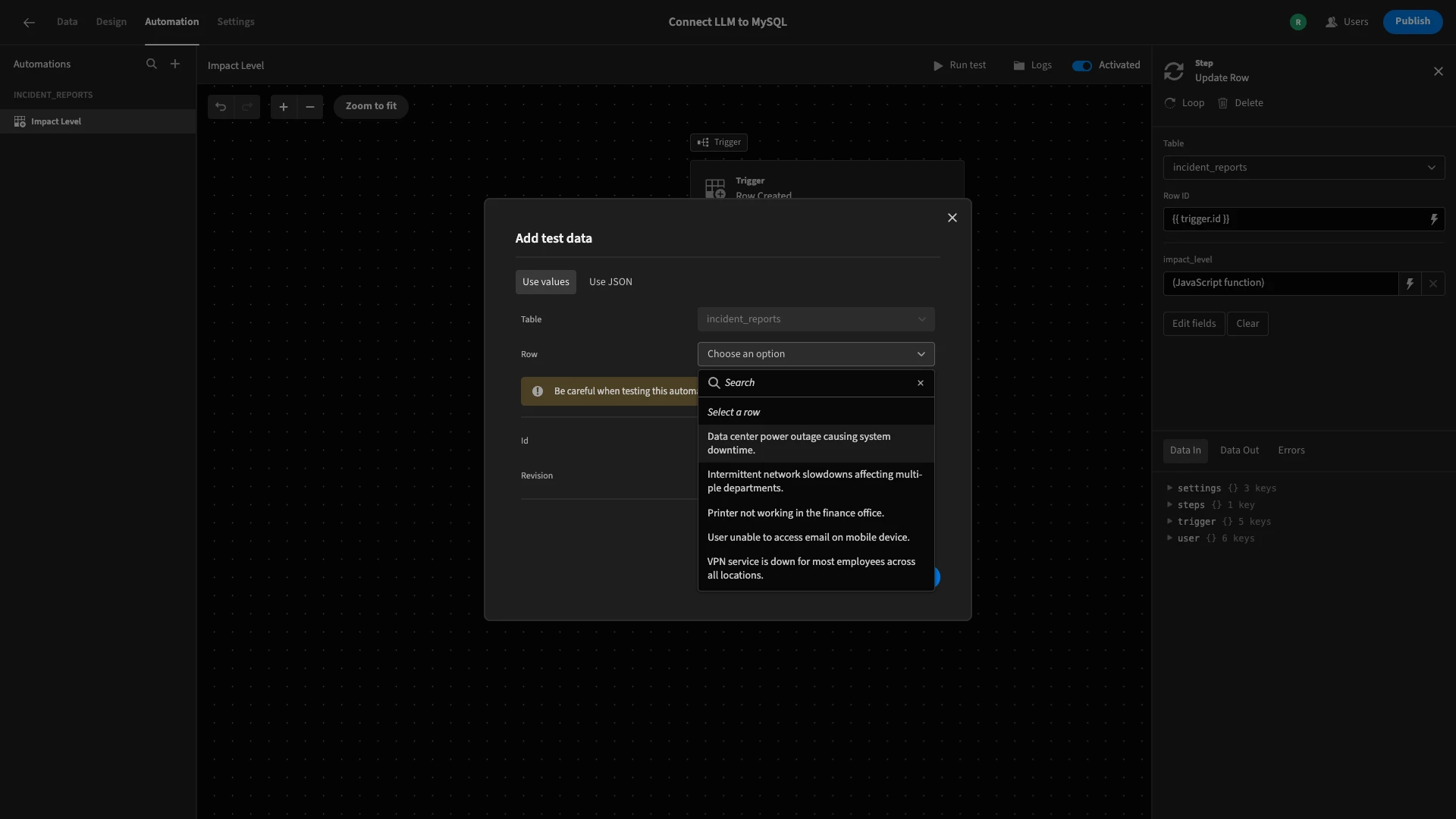

To finish, we can hit Run Test to verify everything works as we expect it to.

When we do this, we can choose an existing row to use as our test data.

In our test details, we can see that the automation has successfully populated an impact_level for our row.

4. Creating an incident report form UI

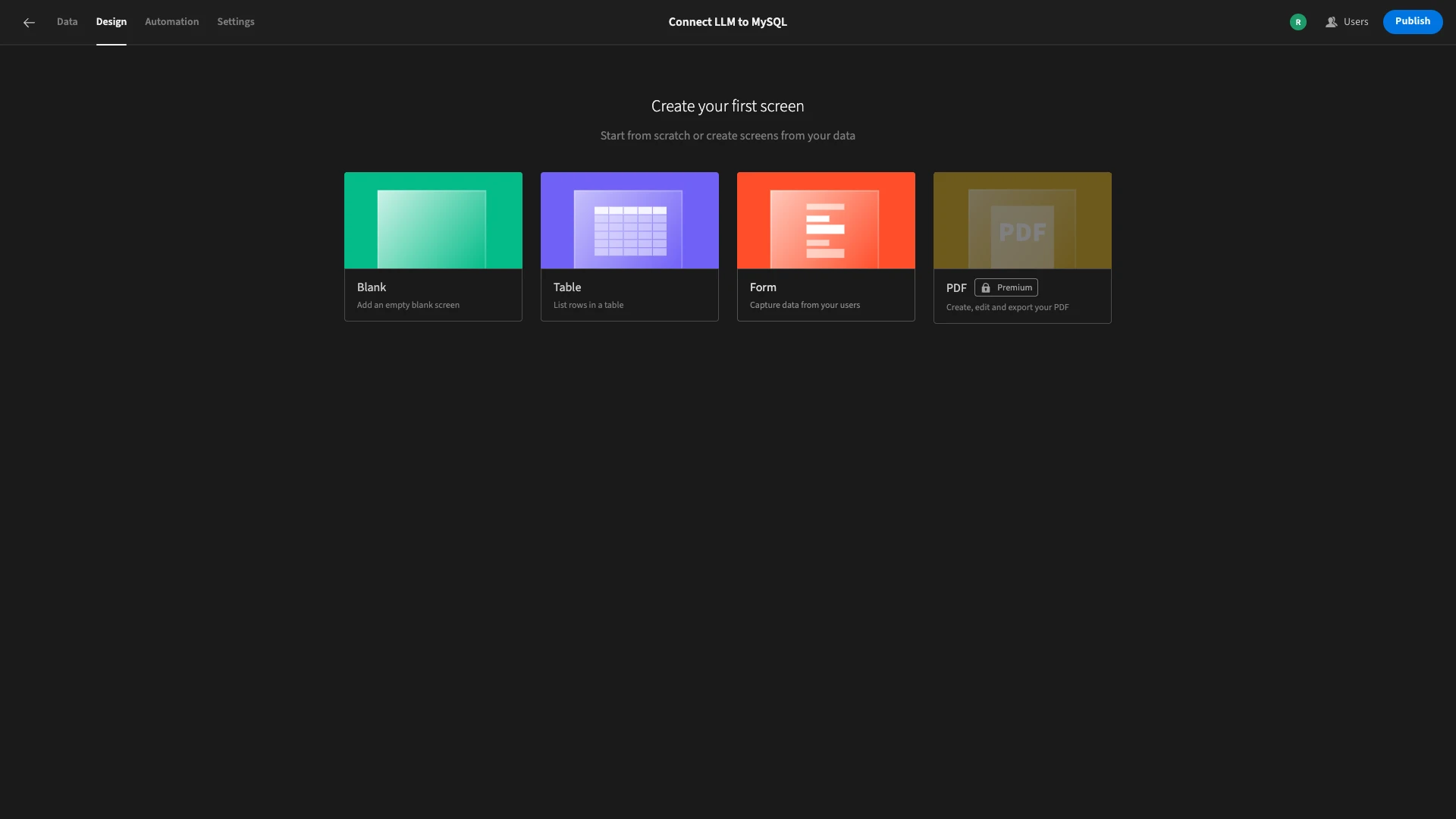

With our automation logic completed, we can move on to building a UI for our end users to report incidents. With 大象传媒, we can autogenerate customizable UIs based on connected data sources.

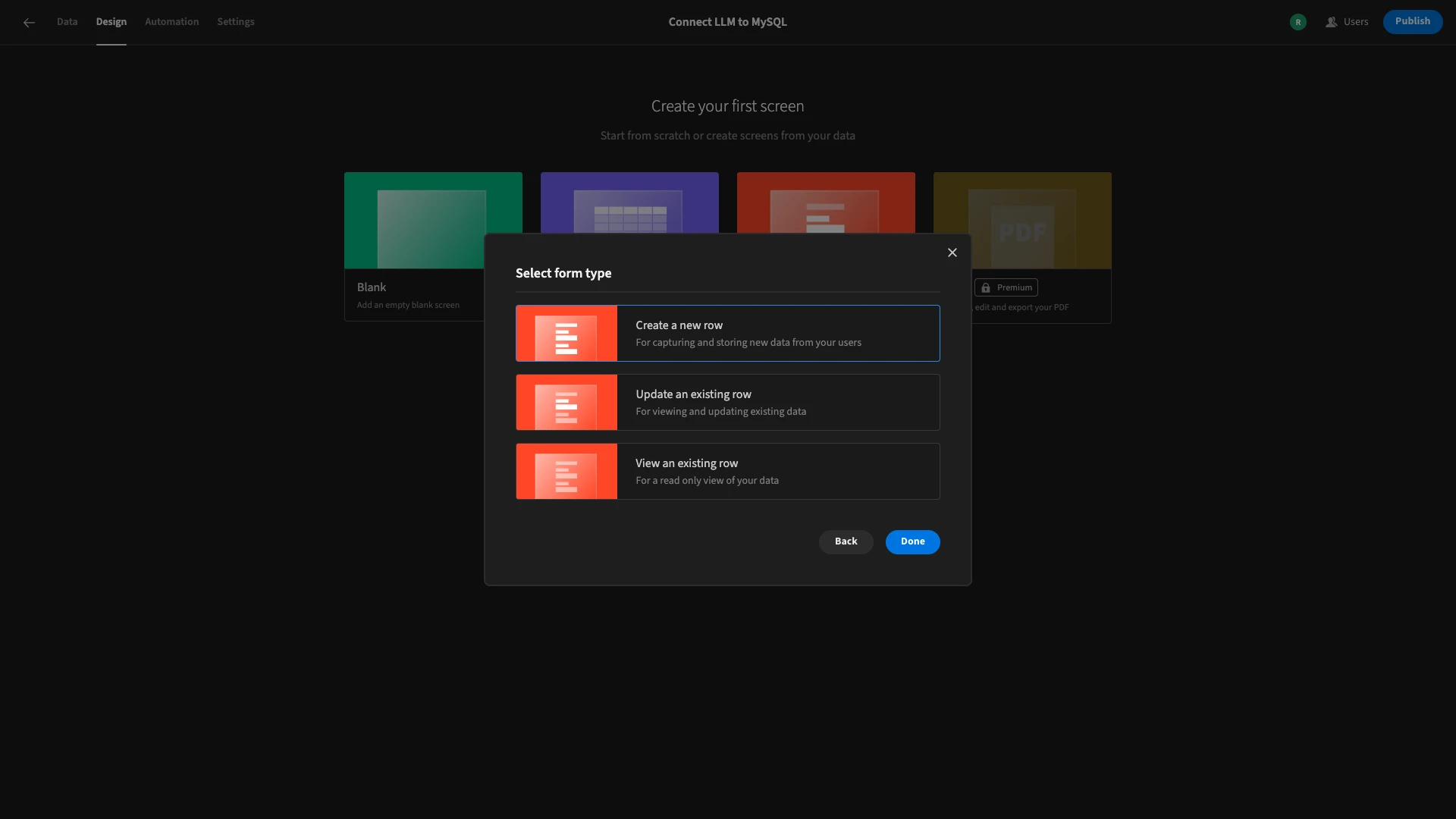

We’ll start by heading to the Design section. Here, we’re offered a choice of several layouts.

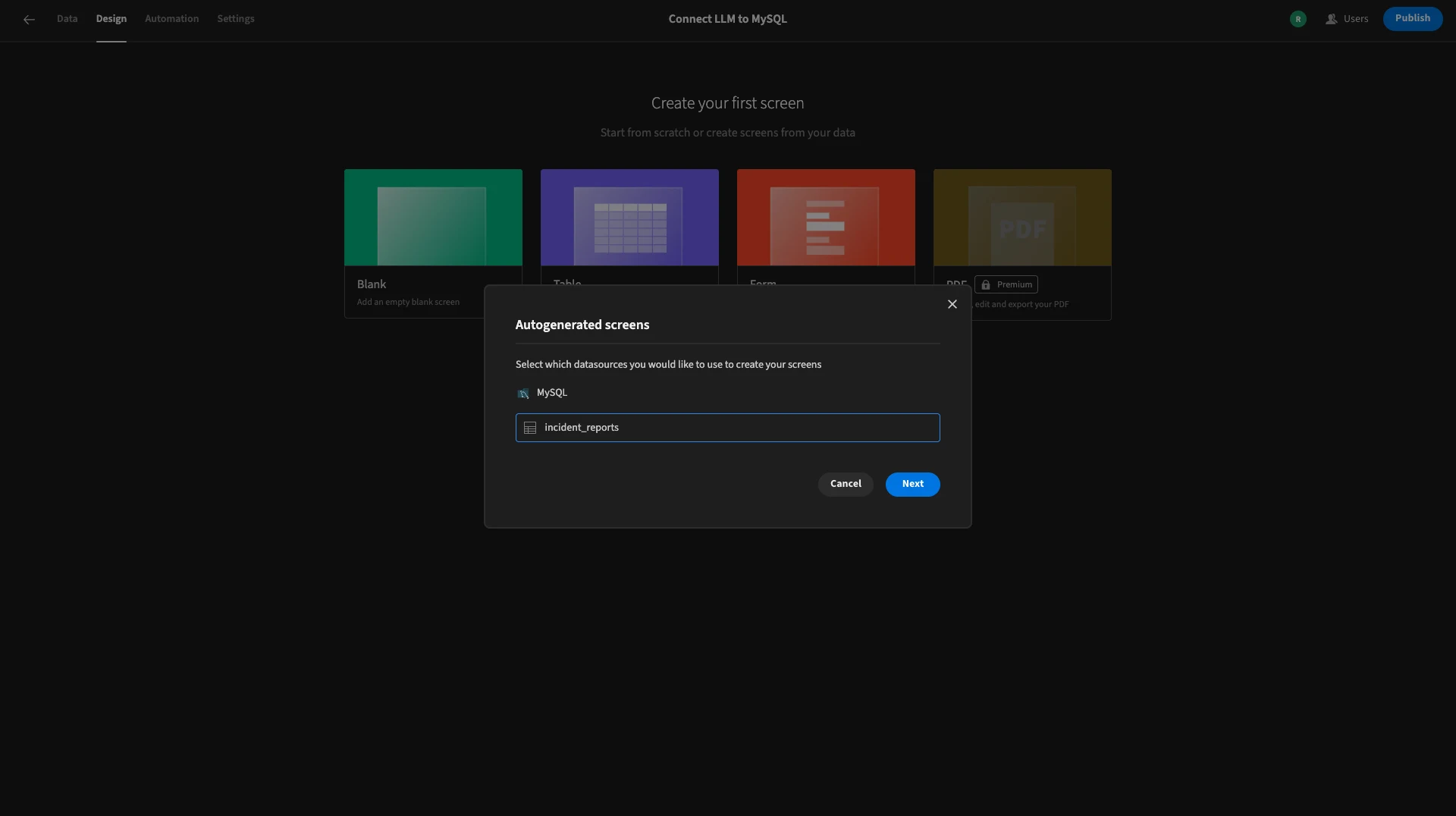

We can then choose which table we want to base this on.

We also need to choose which database operation our form will perform. We want a form that will create a new row.

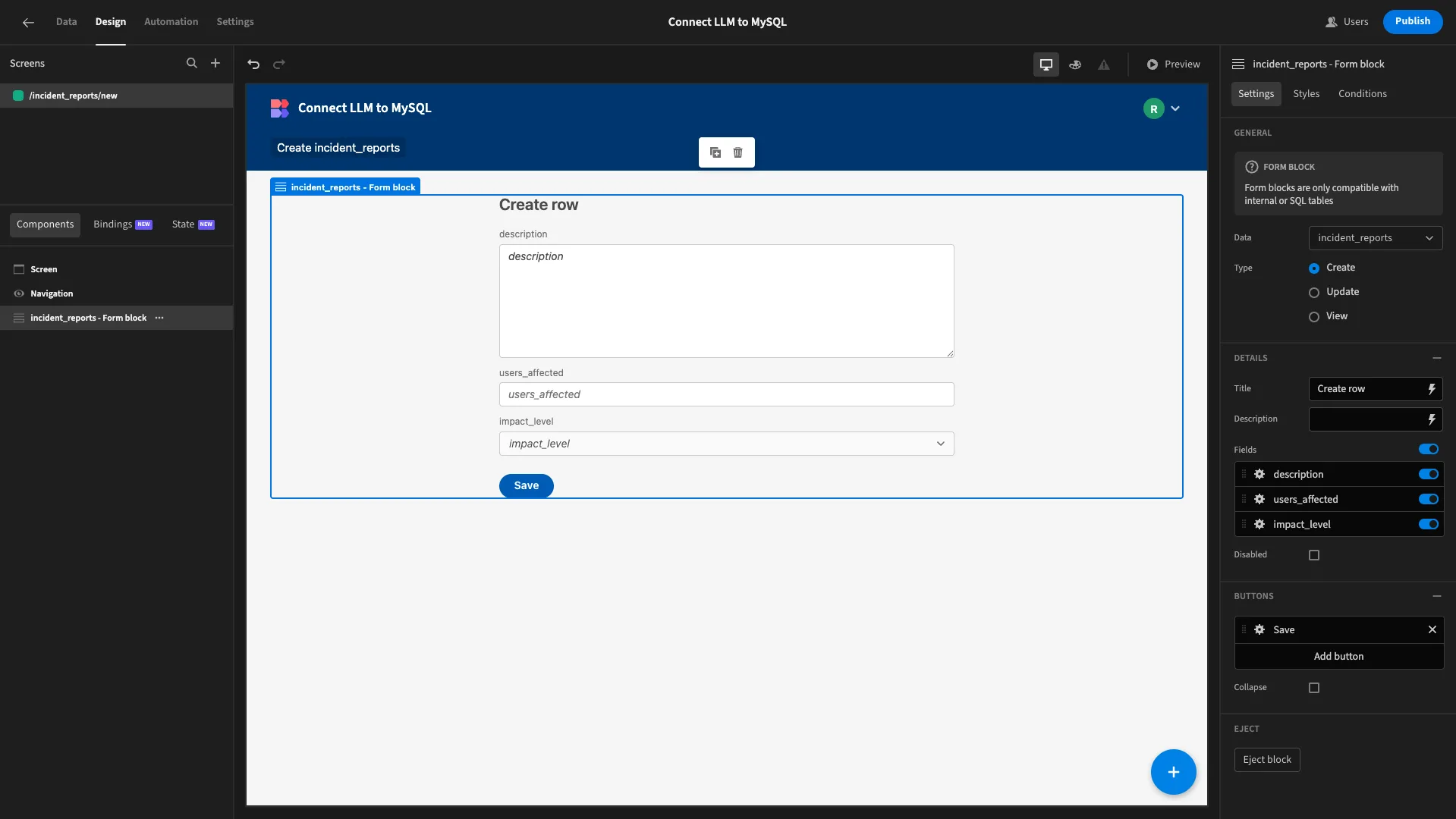

Here’s how this will look.

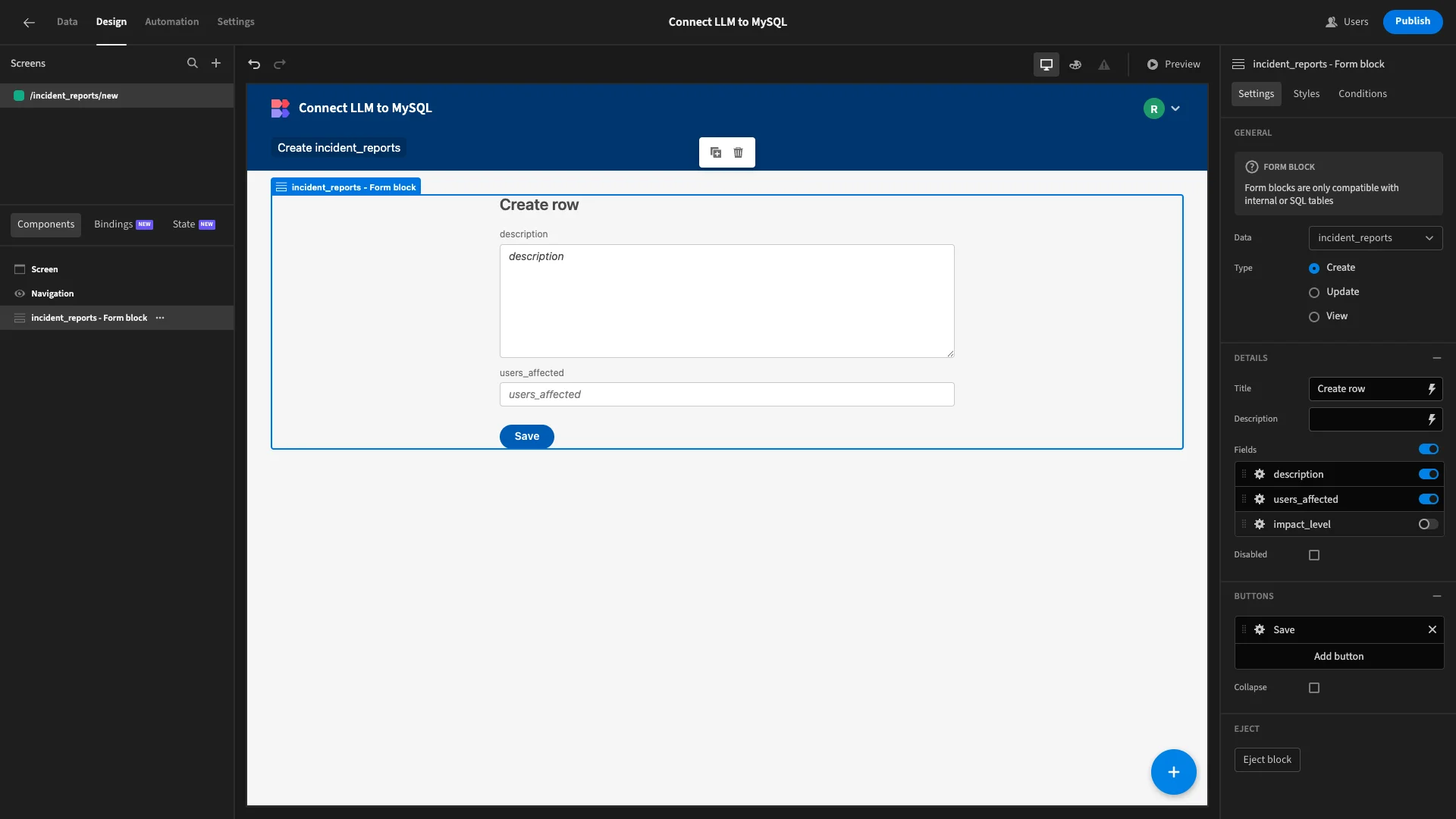

The first change we’ll make is to remove the impact_level field, as we don’t need users to provide this manually.

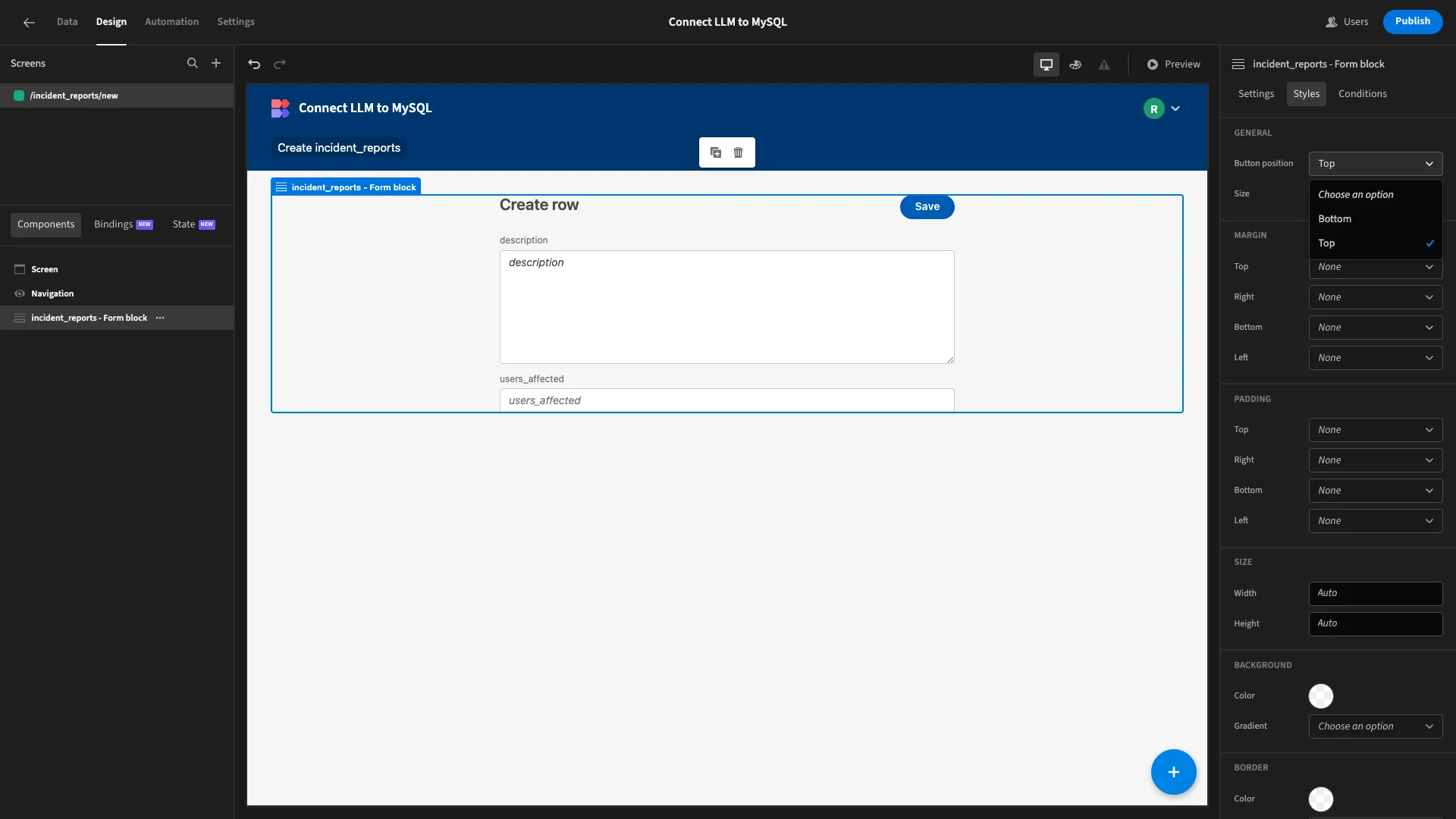

Under Styles, we’ll set our Button Position to Top.

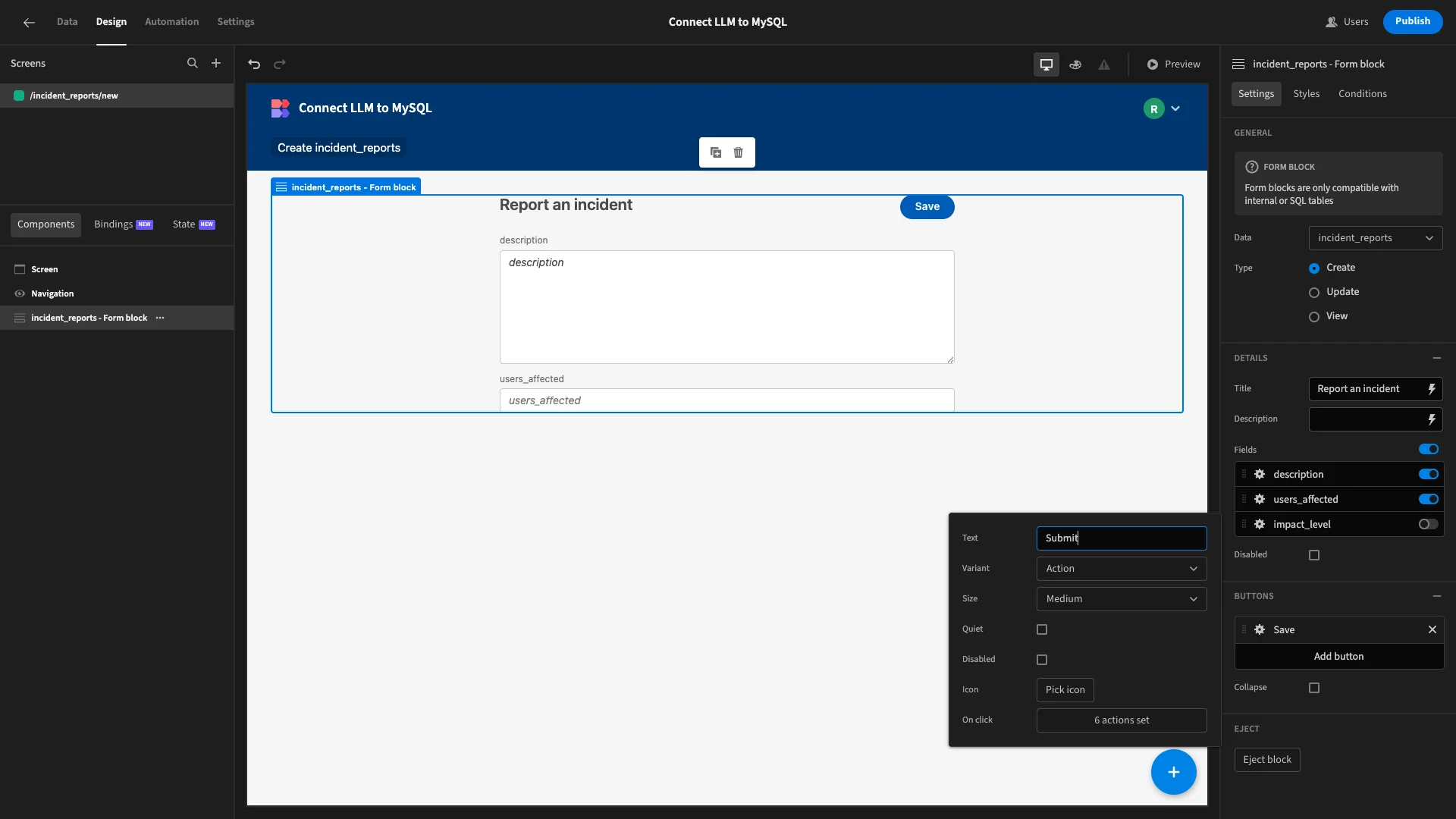

We’ll also make a few adjustments to our display text to make things a bit more user-friendly. Firstly, we’ll update our Title and Button Text.

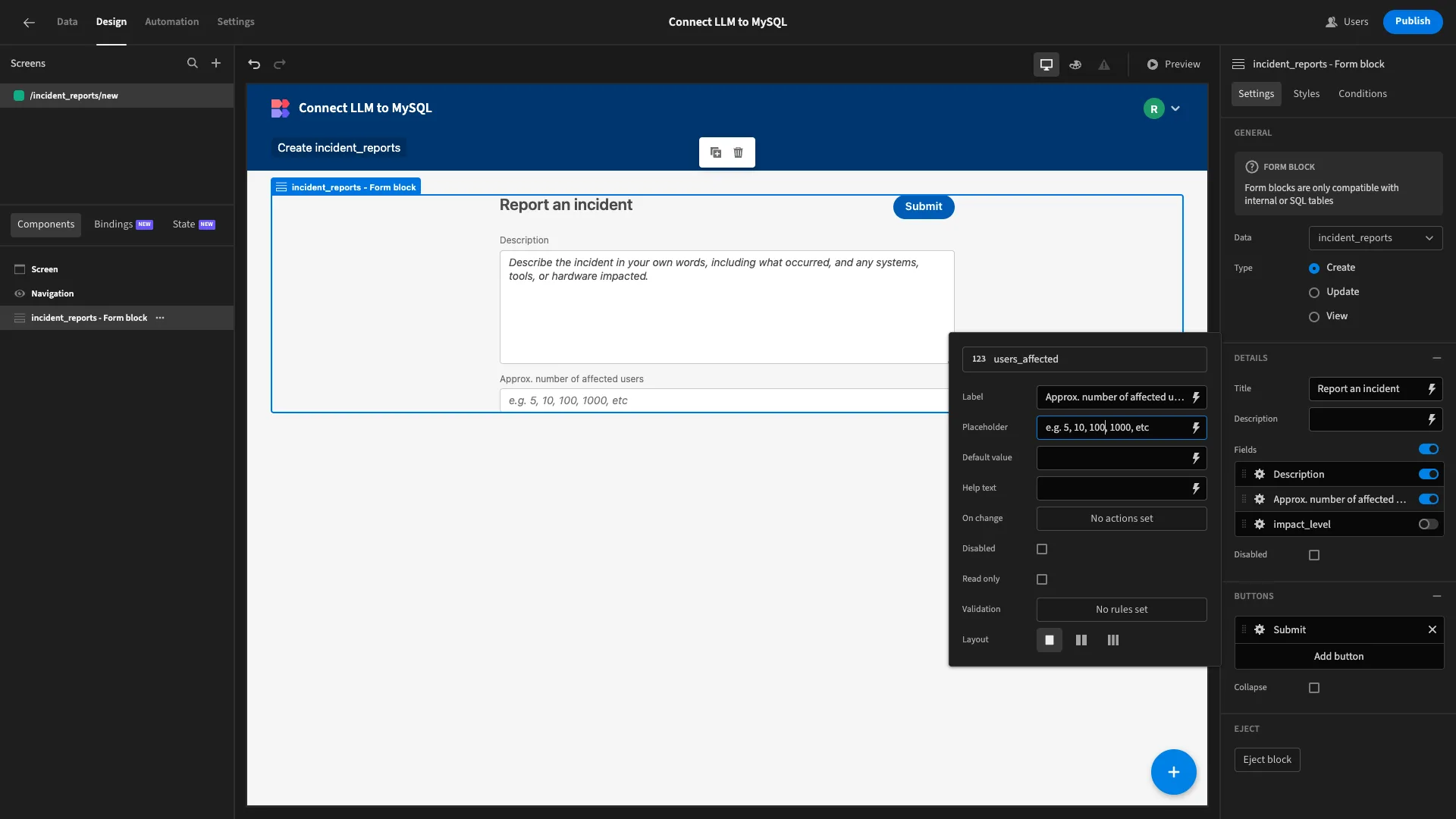

We’re also going to update the Label and Placeholder settings for each of our fields to better reflect the information we need users to provide.

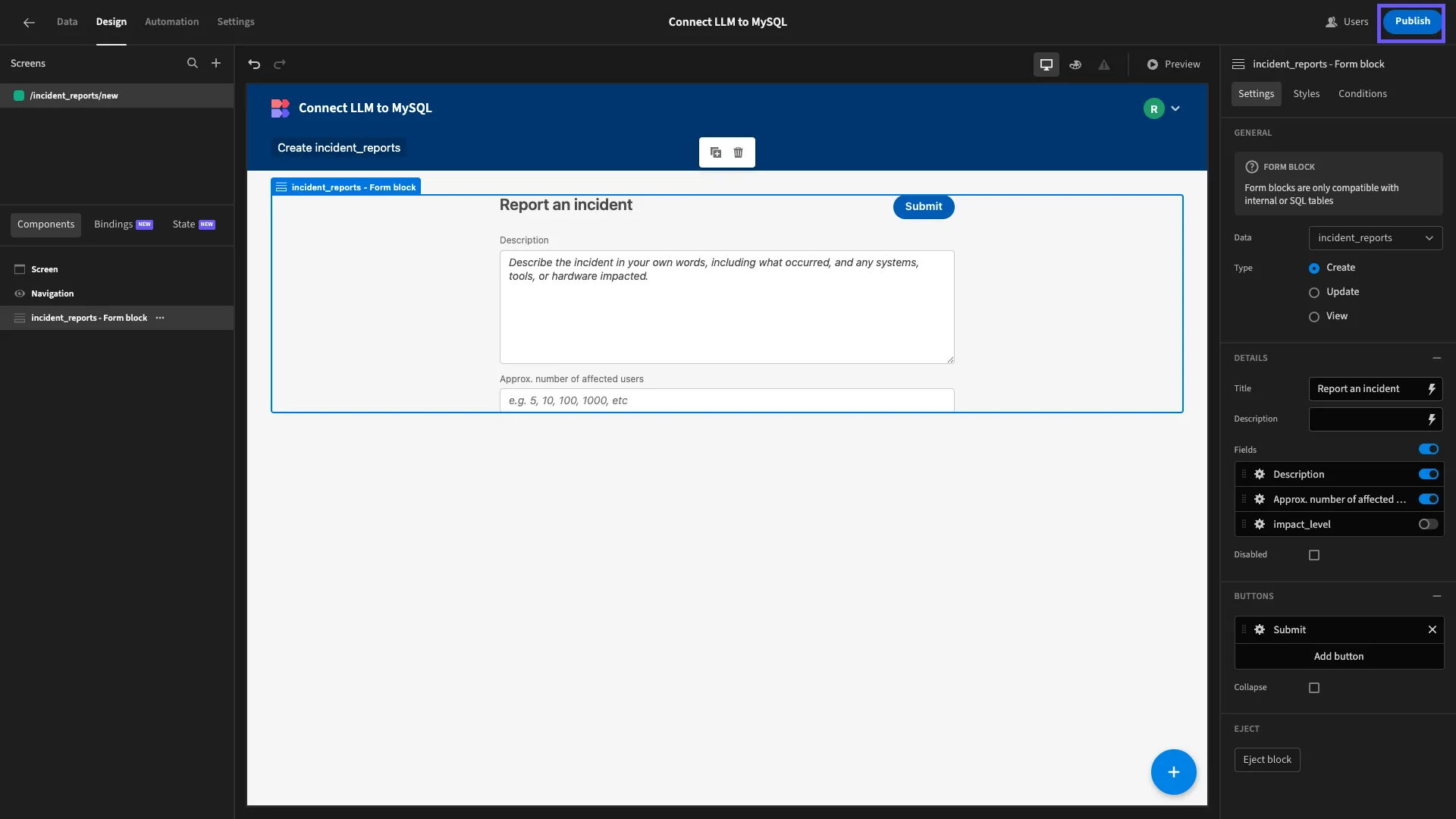

When we’re satisfied, we can hit Publish to push our app live.

Turn data into action with 大象传媒

大象传媒 is the open-source, low-code platform that empowers IT teams to turn data into action.

We offer leading connectivity for a huge range of LLMs, RDBMSs, NoSQL tools, APIs, and more, alongside autogenerated UIs, powerful visual automations, free SSO, optional self-hosting, and custom RBAC.

There’s never been a better way to ship secure, performant workflow tools at pace. Take a look at our features overview to learn more.